Now is exactly the wrong moment to be reviewing the state of photonic quantum computing — the field is moving so rapidly, at just this moment, that everything I say here now will probably be out of date in just a few years. On the other hand, now is exactly the right time to be doing this review, because so much has happened in just the past few years, that it is important to take a moment and look at where this field is today and where it will be going.

At the 20-year anniversary of the publication of my book Mind at Light Speed (Free Press, 2001), this blog is the third in a series reviewing progress in three generations of Machines of Light over the past 20 years (see my previous blogs on the future of the photonic internet and on all-optical computers). This third and final update reviews progress on the third generation of the Machines of Light: the Quantum Optical Generation. Of the three generations, this is the one that is changing the fastest.

Quantum computing is almost here … and it will be at room temperature, using light, in photonic integrated circuits!

Quantum Computing with Linear Optics

Twenty years ago, in 2001, Emanuel Knill and Raymond Laflamme at Los Alamos National Lab, with Gerard Milburn at the University of Queensland, Australia, published a revolutionary theoretical paper (known as KLM) in Nature on quantum computing with linear optics: “A scheme for efficient quantum computation with linear optics” [1]. Up until that time, it was believed that a quantum computer, if it was going to have the property of a universal Turing machine, needed to have at least some nonlinear interactions among qubits in a quantum gate. For instance, an example of a two-qubit gate is a controlled-NOT, or CNOT, gate shown in Fig. 1 with the Truth Table and the equivalent unitary matrix. It is clear that one qubit is controlling the other, telling it what to do.

The quantum CNOT gate gets interesting when the control line carries a quantum superposition: the two outputs then become entangled.

Entanglement is a strange process that is unique to quantum systems and has no classical analog. It also has no simple intuitive explanation. By any normal logic, if the control line passes through the gate unaltered, then absolutely nothing interesting should be happening on the Control-Out line. But that’s not the case. The control line going in was a separate state. If some measurement were made on it, either a 1 or 0 would be seen with equal probability. But coming out of the CNOT, the signal has somehow become perfectly correlated with whatever value is on the Signal-Out line. If the Signal-Out is measured, the measurement process collapses the state of the Control-Out to a value equal to the measured signal. The outcome of the control line becomes 100% certain even though nothing was ever done to it! This entanglement generation is one reason the CNOT is often the gate of choice when constructing quantum circuits to perform interesting quantum algorithms.
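The entangling action described above can be checked numerically. The following is a minimal sketch, assuming the standard CNOT matrix in the basis |control, signal> and a control qubit prepared in an equal superposition; the helper name `schmidt_rank` is introduced here only for illustration.

```python
import numpy as np

# CNOT in the basis |control, signal> = |00>, |01>, |10>, |11>
CNOT = np.array([[1, 0, 0, 0],
                 [0, 1, 0, 0],
                 [0, 0, 0, 1],
                 [0, 0, 1, 0]], dtype=complex)

zero = np.array([1, 0], dtype=complex)
plus = np.array([1, 1], dtype=complex) / np.sqrt(2)  # equal superposition on the control line

state_in = np.kron(plus, zero)   # separable input: control (x) signal
state_out = CNOT @ state_in      # (|00> + |11>) / sqrt(2)


def schmidt_rank(state, tol=1e-12):
    """Count nonzero Schmidt coefficients of a two-qubit state; 1 means separable."""
    singular_values = np.linalg.svd(state.reshape(2, 2), compute_uv=False)
    return int(np.sum(singular_values > tol))


# Joint outcome probabilities for |00>, |01>, |10>, |11>
probabilities = np.abs(state_out) ** 2

print(schmidt_rank(state_in), schmidt_rank(state_out))
print(probabilities)
```

The input has Schmidt rank 1 (a separable state), while the output has Schmidt rank 2 and only the outcomes 00 and 11 ever occur, which is the perfect correlation between Control-Out and Signal-Out described in the text.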

However, optical implementation of a CNOT is a problem, because light beams and photons really do not like to interact with each other. This is the problem with all-optical classical computers too (see my previous blog). There are ways of getting light to interact with light, for instance inside nonlinear optical materials. And in the case of quantum optics, a single atom in an optical cavity can interact with single photons in ways that can act like a CNOT or related gates. But the efficiencies are very low and the costs to implement it are very high, making it difficult or impossible to scale such systems up into whole networks needed to make a universal quantum computer.

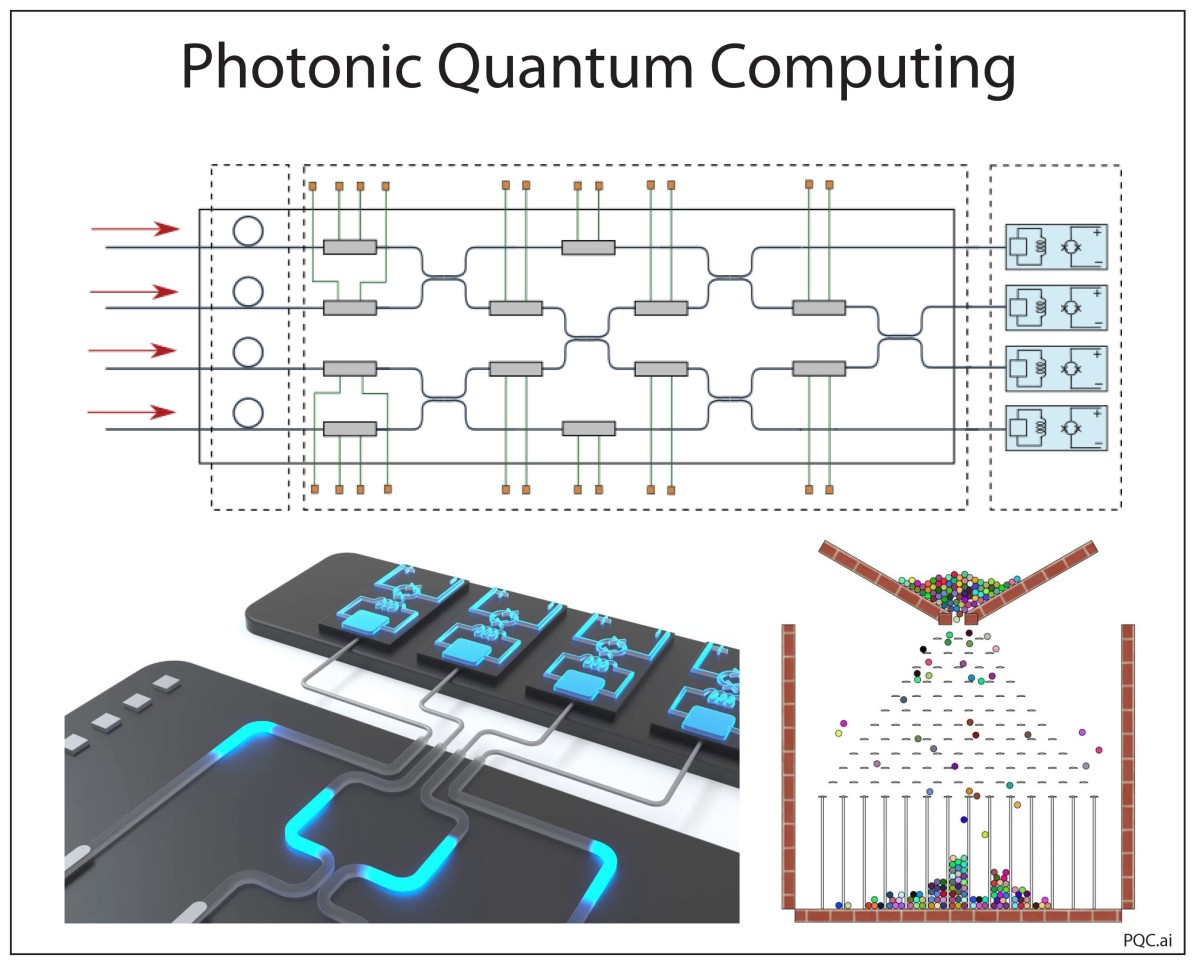

Therefore, when KLM published their idea for quantum computing with linear optics, it caused a shift in the way people were thinking about optical quantum computing. A universal optical quantum computer could be built using just light sources, beam splitters and photon detectors.

The way that KLM gets around the need for a direct nonlinear interaction between two photons is to use postselection. They run a set of photons, both signal photons and ancilla (test) photons, through their linear optical system and they detect the ancilla photons (in principle, since the paper is purely a theoretical proposal). If these photons are not detected where they are wanted, then that iteration of the computation is thrown out, and it is tried again and again, until the photons end up where they need to be. When the ancilla outcomes are finally what they need to be, this run is selected because the signal state is known to have undergone a known transformation. The signal photons are still unmeasured at this point and are therefore in quantum superpositions that are useful for quantum computation. Postselection uses entanglement and measurement collapse to put the signal photons into desired quantum states. Postselection provides an effective nonlinearity that is induced by the wavefunction collapse of the entangled state. Of course, the down side of this approach is that many iterations are thrown out, so the computation becomes non-deterministic.
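To get a feel for the cost of throwing runs away, here is a rough sketch of the repeat-until-success bookkeeping. It does not simulate any optics: each attempt is just a biased coin flip. The per-attempt success probability of 1/16 is the figure commonly quoted for the basic KLM two-qubit gate and is used here as an illustrative assumption, and the function names are hypothetical.

```python
import random

P_GATE = 1 / 16  # assumed per-attempt success probability of one postselected gate


def attempts_until_success(p_success, rng):
    """Count runs until the ancilla detectors finally show the wanted pattern."""
    attempts = 1
    while rng.random() >= p_success:
        attempts += 1
    return attempts


def mean_attempts(p_success, trials=20000, seed=1):
    """Monte Carlo estimate of the average number of runs per accepted gate."""
    rng = random.Random(seed)
    total = sum(attempts_until_success(p_success, rng) for _ in range(trials))
    return total / trials


def chain_success_probability(n_gates, p_success=P_GATE):
    """Probability that n independent postselected gates all succeed in one run."""
    return p_success ** n_gates


print(mean_attempts(P_GATE))          # close to 1 / P_GATE
print(chain_success_probability(3))   # shrinks exponentially with circuit depth
```

The average number of attempts is 1/p for a single gate, but the chance that a whole circuit of such gates succeeds in one pass falls off exponentially with the number of gates, which is why extra ancilla photons are needed to push the success rate back up.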

KLM could get around most of the non-determinism by using more and more ancilla photons, but this has the cost of blowing up the size and cost of the implementation, so their scheme was not immediately practical. But the important point was that it introduced the idea of linear quantum computing. (For this, Milburn and his collaborators have my vote for a future Nobel Prize.) Once that idea was out, others refined it, and improved upon it, and found clever ways to make it more efficient and more scalable. Many of these ideas relied on a technology that was co-evolving with quantum computing: photonic integrated circuits (PICs).

Quantum Photonic Integrated Circuits (QPICs)

Never underestimate the power of silicon. The amount of time and energy and resources that have now been invested in silicon device fabrication is so astronomical that almost nothing in this world can displace it as the dominant technology of the present day and the future. Therefore, when a photon can do something better than an electron, you can guess that eventually that photon will be encased in a silicon chip, on a photonic integrated circuit (PIC).

The dream of integrated optics (the optical analog of integrated electronics) has been around for decades, where waveguides take the place of conducting wires, and interferometers take the place of transistors, all miniaturized and fabricated in the thousands on silicon wafers. The advantages of PICs are obvious, but they have taken a long time to develop. When I was a post-doc at Bell Labs in the late 1980s, everyone was talking about PICs, but they had terrible fabrication challenges and terrible attenuation losses. Fortunately, these are just technical problems, not limited by any fundamental laws of physics, so time (and an army of researchers) has chipped away at them.

One of the driving forces behind the maturation of PIC technology is photonic fiber optic communications (as discussed in a previous blog). Photons are clear winners when it comes to long-distance communications. In that sense, photonic information technology is a close cousin to silicon: photons are no more likely to be replaced by a future technology than silicon is. Therefore, it made sense to bring the photons onto the silicon chips, tapping into the full array of silicon fab resources so that there could be seamless integration between fiber optics doing the communications and the photonic chips directing the information. Admittedly, photonic chips are not yet all-optical. They still use electronics to control the optical devices on the chip, but this niche for photonics has provided a driving force for advancements in PIC fabrication.

One side-effect of improved PIC fabrication is low light losses. In telecommunications, this loss is not so critical because the systems use optical-electrical-optical (OEO) regeneration. But less loss is always good, and the PICs can now safeguard almost every photon that comes on chip, which is exactly what is needed for a quantum PIC. In a quantum photonic circuit, every photon is valuable and informative and needs to be protected. The new PIC fabrication can do this. In addition, light switches for telecom applications are built from integrated interferometers on the chip. It turns out that interferometers at the single-photon level are unitary quantum gates that can be used to build universal photonic quantum computers. So the same technology and control that was used for telecom is just what is needed for photonic quantum computers. In addition, integrated optical cavities on the PICs, which look just like wavelength filters when used for classical optics, are perfect for producing quantum states of light known as squeezed light that turn out to be valuable for certain specialty types of quantum computing.
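The claim that an on-chip interferometer is a unitary gate can be made concrete with a small sketch of a Mach-Zehnder interferometer: two 50/50 beam splitters with an adjustable phase shifter on one arm between them. The symmetric beam-splitter matrix and the function names below are conventions chosen for this illustration, not a description of any particular chip.

```python
import numpy as np

# Symmetric 50/50 beam splitter acting on two waveguide modes
BEAM_SPLITTER = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)


def phase_shifter(phi):
    """Phase delay phi applied to the upper arm only."""
    return np.diag([np.exp(1j * phi), 1.0])


def mach_zehnder(phi):
    """Beam splitter, then phase shifter, then beam splitter: a 2x2 unitary."""
    return BEAM_SPLITTER @ phase_shifter(phi) @ BEAM_SPLITTER


def output_probabilities(phi, input_port=0):
    """Detection probabilities at the two outputs for one photon entering input_port."""
    amplitudes = mach_zehnder(phi)[:, input_port]
    return np.abs(amplitudes) ** 2


for phi in (0.0, np.pi / 2, np.pi):
    print(phi, output_probabilities(phi))
```

With this convention a photon entering port 0 exits port 0 with probability sin²(φ/2), so sweeping the phase turns the same device from a full cross-over switch (φ = 0) into a 50/50 splitter (φ = π/2) and then a straight-through connection (φ = π). A telecom switch uses only the two end points; a quantum gate uses the whole range.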

Therefore, as the concepts of linear optical quantum computing advanced through the last 20 years, the hardware to implement those concepts also advanced, driven by a highly lucrative market segment that provided the resources to tap into the vast miniaturization capabilities of silicon chip fabrication. Very fortuitous!

Room-Temperature Quantum Computers

There are many radically different ways to make a quantum computer. Some are built of superconducting circuits, others are made from semiconductors, or arrays of trapped ions, or nuclear spins in molecules, and of course photons. Up until about 5 years ago, optical quantum computers seemed like long shots. Perhaps the most advanced technology was the superconducting approach. Superconducting quantum interference devices (SQUIDs) have exquisite sensitivity that makes them robust quantum information devices. But the drawback is the cold temperatures that are needed for them to work. Many of the other approaches likewise need cold temperatures, sometimes astronomically cold temperatures that are only a few thousandths of a degree above absolute zero.

Cold temperatures and quantum computing seemed a foregone conclusion — you weren’t ever going to separate them — and for good reason. The single greatest threat to quantum information is decoherence — the draining away of the kind of quantum coherence that allows interferences and quantum algorithms to work. In this way, entanglement is a two-edged sword. On the one hand, entanglement provides one of the essential resources for the exponential speed-up of quantum algorithms. But on the other hand, if a qubit “sees” any environmental disturbance, then it becomes entangled with that environment. The entangling of quantum information with the environment causes the coherence to drain away — hence decoherence. Hot environments disturb quantum systems much more than cold environments, so there is a premium for cooling the environment of quantum computers to as low a temperature as they can. Even so, decoherence times can be microseconds to milliseconds under even the best conditions — quantum information dissipates almost as fast as you can make it.

Enter the photon! The bottom line is that photons don’t interact. They are blind to their environment. This is what makes them perfect information carriers down fiber optics. It is also what makes them such good qubits for carrying quantum information. You can prepare a photon in a quantum superposition just by sending it through a lossless polarizing crystal, and then the superposition will last for as long as you can let the photon travel (at the speed of light). Sometimes this means putting the photon into a coil of fiber many kilometers long to store it, but that is OK: a kilometer of coiled fiber in the lab is no bigger than a few tens of centimeters. So the same properties that make photons excellent at carrying information also give them very small decoherence. And after the KLM schemes began to be developed, the non-interacting properties of photons were no longer a handicap.

In the past 5 years there has been an explosion, as well as an implosion, of quantum photonic computing advances. The implosion is the level of integration which puts more and more optical elements into smaller and smaller footprints on silicon PICs. The explosion is the number of first-of-a-kind demonstrations: the first universal optical quantum computer [2], the first programmable photonic quantum computer [3], and the first (true) quantum computational advantage [4].

All of these “firsts” operate at room temperature. (There is a slight caveat: The photon-number detectors are actually superconducting wire detectors that do need to be cooled. But these can be housed off-chip and off-rack in a separate cooled system that is coupled to the quantum computer by — no surprise — fiber optics.) These are the advantages of photonic quantum computers: hundreds of qubits integrated onto chips, room-temperature operation, long decoherence times, compatibility with telecom light sources and PICs, compatibility with silicon chip fabrication, universal gates using postselection, and more. Despite the head start of some of the other quantum computing systems, photonics looks like it will be overtaking the others within only a few years to become the dominant technology for the future of quantum computing. And part of that future is being helped along by a new kind of quantum algorithm that is perfectly suited to optics.

A New Kind of Quantum Algorithm: Boson Sampling

In 2011, Scott Aaronson (then at MIT) published a landmark paper titled “The Computational Complexity of Linear Optics” with his graduate student, Alex Arkhipov [5]. The authors speculated on whether there could be an application of linear optics, not requiring the costly step of post-selection, that was still useful while simultaneously demonstrating quantum computational advantage. In other words, could one find a linear optical system working with photons that could solve problems intractable to a classical computer? To their own amazement, they did! The answer was something they called “boson sampling”.

To get an idea of what boson sampling is, and why it is very hard to do on a classical computer, think of the classic demonstration of the normal probability distribution found at almost every science museum you visit, illustrated in Fig. 2. A large number of ping-pong balls are dropped one at a time through a forest of regularly-spaced posts, bouncing randomly this way and that until they are collected into bins at the bottom. Bins near the center collect many balls, while bins farther to the side have fewer. If there are many balls, then the stacked heights of the balls in the bins map out a Gaussian probability distribution. The path of a single ping-pong ball represents a series of “decisions” as it hits each post and goes left or right, and the number of permutations of all the possible decisions among all the other ping-pong balls grows exponentially—a hard problem to tackle on a classical computer.

In the paper, Aaronson and Arkhipov considered a quantum analog to the ping-pong problem in which the ping-pong balls are replaced by photons, and the posts are replaced by beam splitters. In its simplest possible implementation, it could have two photon channels incident on a single beam splitter. The well-known result in this case is the Hong-Ou-Mandel (HOM) dip [6], which is a consequence of the boson statistics of the photon: two identical photons arriving together on a 50/50 beam splitter always leave through the same output port. Now scale this system up to many channels and a cascade of beam splitters, and one has an N-channel multi-photon HOM cascade. The output of this photonic “circuit” is a sampling of the vast number of permutations allowed by Bose statistics: boson sampling.
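The two-photon case is small enough to work out directly. In the sketch below, the beam splitter is parameterized by a mixing angle theta (a convention chosen here for illustration, with theta = π/4 giving a 50/50 splitter). For identical photons the two ways of getting one photon in each output are added as amplitudes before squaring; for distinguishable particles, like ping-pong balls, they are added as probabilities.

```python
import numpy as np


def beam_splitter(theta):
    """2x2 unitary for a beam splitter; theta = pi/4 is a 50/50 splitter."""
    return np.array([[np.cos(theta), 1j * np.sin(theta)],
                     [1j * np.sin(theta), np.cos(theta)]])


def coincidence_identical(theta):
    """Probability of one photon in each output for two identical photons."""
    u = beam_splitter(theta)
    # Both-transmitted and both-reflected amplitudes interfere
    # (this sum is the permanent of the 2x2 matrix).
    amplitude = u[0, 0] * u[1, 1] + u[0, 1] * u[1, 0]
    return abs(amplitude) ** 2


def coincidence_distinguishable(theta):
    """Same event for distinguishable particles: probabilities add, no interference."""
    u = beam_splitter(theta)
    return abs(u[0, 0] * u[1, 1]) ** 2 + abs(u[0, 1] * u[1, 0]) ** 2


print(coincidence_identical(np.pi / 4))        # vanishes at 50/50: the bottom of the dip
print(coincidence_distinguishable(np.pi / 4))  # classical value of one half
```

At the 50/50 point the two amplitudes cancel exactly, so coincidences disappear for identical photons while distinguishable particles still give a coincidence half of the time.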

To make the problem more interesting, Aaronson and Arkhipov allowed the photons to be launched from any channel at the top (as opposed to dropping all the ping-pong balls at the same spot), and they allowed each beam splitter to have adjustable phases (photons and phases are the key elements of an interferometer). By adjusting the locations of the photon channels and the phases of the beam splitters, it would be possible to “program” this boson cascade to mimic interesting quantum systems or even to solve specific problems, although they were not thinking that far ahead. The main point of the paper was the proposal that implementing boson sampling in a photonic circuit used resources that scaled linearly in the number of photon channels, while the problems that could be solved grew exponentially, a clear quantum computational advantage [4].
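To see where the classical difficulty comes from, here is a small brute-force sketch of the boson-sampling output distribution. It assumes the standard result that each output probability is the squared magnitude of the permanent of a submatrix of the interferometer unitary, divided by factorials for output channels that hold more than one photon. The function names are hypothetical, the random unitary is only a stand-in for a programmed circuit (it is unitary but not exactly Haar-distributed), and the naive permanent costs n! operations, so this only works for a handful of photons.

```python
import itertools
import math

import numpy as np


def permanent(matrix):
    """Naive permanent: like a determinant but with all plus signs (cost ~ n!)."""
    n = matrix.shape[0]
    return sum(
        np.prod([matrix[row, perm[row]] for row in range(n)])
        for perm in itertools.permutations(range(n))
    )


def random_unitary(modes, seed=0):
    """Unitary from the QR decomposition of a random complex matrix."""
    rng = np.random.default_rng(seed)
    z = rng.normal(size=(modes, modes)) + 1j * rng.normal(size=(modes, modes))
    q, _ = np.linalg.qr(z)
    return q


def output_distribution(unitary, input_modes):
    """Probability of every output pattern for single photons launched into input_modes."""
    modes = unitary.shape[0]
    n_photons = len(input_modes)
    distribution = {}
    for output_modes in itertools.combinations_with_replacement(range(modes), n_photons):
        # Rows are the output channels hit, columns are the input channels used.
        sub = unitary[np.ix_(output_modes, input_modes)]
        # Factorials correct for several photons landing in the same output channel.
        multiplicity = math.prod(
            math.factorial(output_modes.count(m)) for m in set(output_modes)
        )
        distribution[output_modes] = abs(permanent(sub)) ** 2 / multiplicity
    return distribution


distribution = output_distribution(random_unitary(4), input_modes=(0, 1, 2))
print(len(distribution), sum(distribution.values()))
```

Three photons in four channels already give 20 possible output patterns, each needing its own permanent. The photonic circuit produces samples from this distribution simply by running, while the classical calculation above slows down factorially as photons are added.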

On the other hand, it turned out that boson sampling is not universal—one cannot construct a universal quantum computer out of boson sampling. The first proposal was a specialty algorithm whose main function was to demonstrate quantum computational advantage rather than do something specifically useful—just like Deutsch’s first algorithm. But just like Deutsch’s algorithm, which led ultimately to Shor’s very useful prime factoring algorithm, boson sampling turned out to be the start of a new wave of quantum applications.

Shortly after the publication of Aaronson and Arkhipov’s paper in 2011, there was a flurry of experimental papers demonstrating boson sampling in the laboratory [7, 8]. And it was discovered that boson sampling could solve important and useful problems, such as the energy levels of quantum systems, and network similarity, as well as quantum random-walk problems. Therefore, even though boson sampling is not strictly universal, it solves a broad class of problems. It can be viewed more like a specialty chip than a universal computer, much as the now-ubiquitous GPUs are specialty chips in virtually every desktop and laptop computer today. And the room-temperature operation significantly reduces cost, so you don’t need a whole government agency to afford one. Just like CPU costs followed Moore’s Law to the point where a Raspberry Pi computer costs $40 today, the photonic chips may get onto their own Moore’s Law that will reduce costs over the next several decades until they are common (but still specialty and probably not cheap) computers in academia and industry. A first step along that path was a recently-demonstrated general programmable room-temperature photonic quantum computer.

A Programmable Photonic Quantum Computer: Xanadu’s X8 Chip

I don’t usually talk about specific companies, but the new photonic quantum computer chip from Xanadu, based in Toronto, Canada, feels to me like the start of something big. In the March 4, 2021 issue of Nature magazine, researchers at the company published the experimental results of their X8 photonic chip [3]. The chip uses boson sampling of strongly non-classical light. This was the first generally programmable photonic quantum computing chip, programmed using a quantum programming language they developed called Strawberry Fields. By simply changing the quantum code (using a simple conventional computer interface), they switched the computer output among three different quantum applications: transitions among states (spectra of molecular states), quantum docking, and similarity between graphs that represent two different molecules. These are radically different physics and math problems, yet the single chip can be programmed on the fly to solve each one.

The chip is constructed of silicon nitride waveguides on silicon, shown in Fig. 6. The input lasers drive ring resonators that produce squeezed states through four-wave mixing. The key to the reprogrammability of the chip is the set of phase modulators that use simple thermal changes on the waveguides. These phase modulators are changed in response to commands from the software to reconfigure the application. Although they switch slowly, once they are set to their new configuration, the computations take place “at the speed of light”. The photonic chip is at room temperature, but the outputs of the four channels are sent by fiber optic to a cooled unit containing the superconductor nanowire photon counters.

Admittedly, the four channels of the X8 chip are not large enough to solve the kinds of problems that would require a quantum computer, but the company has plans to scale the chip up to 100 channels. One of the challenges is to reduce the amount of photon loss in a multiplexed chip, but standard silicon fabrication approaches are expected to reduce loss in the next generation chips by an order of magnitude.

Additional companies are also in the process of entering the photonic quantum computing business, such as PsiQuantum, which recently closed a $450M funding round to produce photonic quantum chips with a million qubits. The company is led by Jeremy O’Brien from Bristol University who has been a leader in photonic quantum computing for over a decade.

Stay tuned!

By David D. Nolte, Dec. 20, 2021

Further Reading

• David D. Nolte, “Interference: A History of Interferometry and the Scientists who Tamed Light” (Oxford University Press, to be published in 2023)

• J. L. O’Brien, A. Furusawa, and J. Vuckovic, “Photonic quantum technologies,” Nature Photonics, Review vol. 3, no. 12, pp. 687-695, Dec (2009)

• T. C. Ralph and G. J. Pryde, “Optical Quantum Computation,” in Progress in Optics, Vol 54, vol. 54, E. Wolf Ed., (2010), pp. 209-269.

• S. Barz, “Quantum computing with photons: introduction to the circuit model, the one-way quantum computer, and the fundamental principles of photonic experiments,” (in English), Journal of Physics B-Atomic Molecular and Optical Physics, Article vol. 48, no. 8, p. 25, Apr (2015), Art no. 083001

References

[1] E. Knill, R. Laflamme, and G. J. Milburn, “A scheme for efficient quantum computation with linear optics,” Nature, vol. 409, no. 6816, pp. 46-52, Jan (2001)

[2] J. Carolan, J. L. O’Brien et al, “Universal linear optics,” Science, vol. 349, no. 6249, pp. 711-716, Aug (2015)

[3] J. M. Arrazola, et al, “Quantum circuits with many photons on a programmable nanophotonic chip,” Nature, vol. 591, no. 7848, pp. 54-+, Mar (2021)

[4] H.-S. Zhong, J.-W. Pan et al, “Quantum computational advantage using photons,” Science, vol. 370, no. 6523, p. 1460, (2020)

[5] S. Aaronson and A. Arkhipov, “The Computational Complexity of Linear Optics,” in 43rd ACM Symposium on Theory of Computing, San Jose, CA, Jun 06-08 2011, NEW YORK: Assoc Computing Machinery, in Annual ACM Symposium on Theory of Computing, 2011, pp. 333-342

[6] C. K. Hong, Z. Y. Ou, and L. Mandel, “Measurement of subpicosecond time intervals between 2 photons by interference,” Physical Review Letters, vol. 59, no. 18, pp. 2044-2046, Nov (1987)

[7] J. B. Spring, I. A. Walmsley et al, “Boson Sampling on a Photonic Chip,” Science, vol. 339, no. 6121, pp. 798-801, Feb (2013)

[8] M. A. Broome, A. Fedrizzi, S. Rahimi-Keshari, J. Dove, S. Aaronson, T. C. Ralph, and A. G. White, “Photonic Boson Sampling in a Tunable Circuit,” Science, vol. 339, no. 6121, pp. 794-798, Feb (2013)
