Light is one of the most powerful manifestations of the forces of physics because it tells us about our reality. The interference of light, in particular, has led to the detection of exoplanets orbiting distant stars, the discovery of the first gravitational waves, the capture of images of black holes and much more. The stories behind the history of light and interference go to the heart of how scientists do what they do and what they often have to overcome to do it. These timelines are organized along the chapter titles of the book Interference. They follow the path of theories of light from the first wave-particle debate, through the personal firestorms of Albert Michelson, to the discoveries of the present day in quantum information science.

- Thomas Young Polymath: The Law of Interference

- The Fresnel Connection: Particles versus Waves

- At Light Speed: The Birth of Interferometry

- After the Gold Rush: The Trials of Albert Michelson

- Stellar Interference: Measuring the Stars

- Across the Universe: Exoplanets, Black Holes and Gravitational Waves

- Two Faces of Microscopy: Diffraction and Interference

- Holographic Dreams of Princess Leia: Crossing Beams

- Photon Interference: The Foundations of Quantum Communication

- The Quantum Advantage: Interferometric Computing

1. Thomas Young Polymath: The Law of Interference

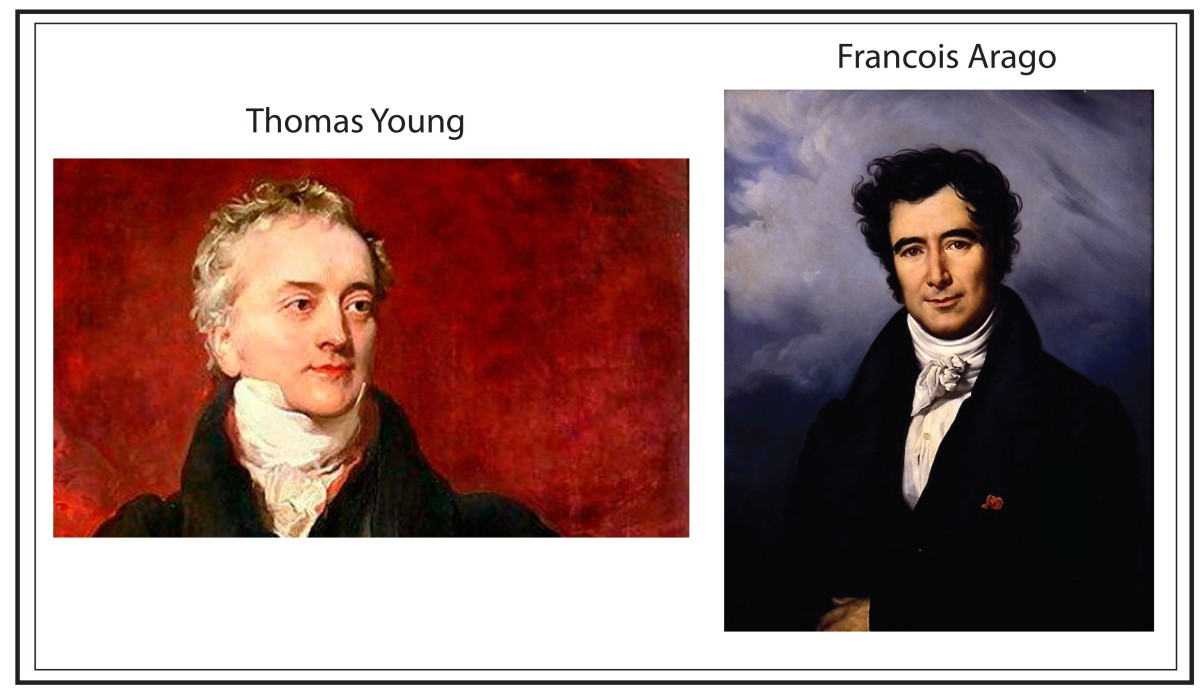

Thomas Young was the ultimate dabbler; his interests and explorations ranged far and wide, from Egyptology to naval engineering, from the physiology of perception to the physics of sound and light. Yet unlike most dabblers, who accomplish little, he made original and seminal contributions to all these fields. Some have called him the “Last Man Who Knew Everything”.

Topics: The Law of Interference. The Rosetta Stone. Benjamin Thompson, Count Rumford. Royal Society. Christiaan Huygens. Pendulum Clocks. Icelandic Spar. Huygens’ Principle. Stellar Aberration. Speed of Light. Double-slit Experiment.

1629 – Huygens born (1629 – 1695)

1642 – Galileo dies, Newton born (1642 – 1727)

1655 – Huygens ring of Saturn

1657 – Huygens patents the pendulum clock

1666 – Newton prismatic colors

1666 – Huygens moves to Paris

1669 – Bartholin double refraction in Icelandic spar

1670 – Bartholin polarization of light by crystals

1671 – Expedition to Hven by Picard and Rømer

1673 – James Gregory bird-feather diffraction grating

1673 – Huygens publishes Horologium Oscillatorium

1675 – Rømer finite speed of light

1678 – Huygens and two crystals of Icelandic spar

1681 – Huygens returns to The Hague

1689 – Huygens meets Newton

1690 – Huygens Traité de la Lumière

1695 – Huygens dies

1704 – Newton’s Opticks

1727 – Bradley aberration of starlight

1746 – Euler Nova theoria lucis et colorum

1773 – Thomas Young born

1786 – François Arago born (1786 – 1853)

1787 – Joseph Fraunhofer born (1787 – 1826)

1788 – Fresnel born in Broglie, Normandy (1788 – 1827)

1794 – École Polytechnique founded in Paris by Lazare Carnot and Gaspard Monge; Malus enters the École

1794 – Young elected member of the Royal Society

1794 – Young enters Edinburgh (as a Quaker, he could not attend the English universities)

1795 – Young enters Göttingen

1796 – Young receives doctor of medicine, grand tour of Germany

1797 – Young returns to England, enters Emmanuel College (converted to the Church of England)

1798 – The Directory approves Napoleon’s Egyptian campaign, Battle of the Pyramids, Battle of the Nile

1799 – Young graduates from Cambridge

1799 – Royal Institution founded

1799 – Young Outlines

1800 – Young Sound and Light read to Royal Society

1800 – Young Mechanisms of the Eye (Bakerian Lecture of the Royal Society)

1801 – Young Theory of Light and Colours, three color mechanism (Bakerian Lecture), Young considers interference to cause the colored films, first estimates of the wavelengths of different colors

1802 – Young begins series of lectures at the Royal Institution (Jan. 1802 – July 1803)

1802 – Young names the principle (Law) of interference

1803 – Young’s 3rd Bakerian Lecture, November: Experiments and Calculations Relative to Physical Optics, The Law of Interference

1807 – Young publishes A Course of Lectures on Natural Philosophy and the Mechanical Arts, based on Royal Institution lectures; two-slit experiment described

1808 – Malus polarization

1811 – Young appointed to St George’s Hospital

1813 – Young begins work on Rosetta stone

1814 – Young translates the demotic script on the stone

1816 – Arago visits Young

1818 – Young’s Encyclopedia article on Egypt

1822 – Champollion publishes translation of hieroglyphics

1827 – Young elected foreign member of the Institute of Paris

1829 – Young dies

2. The Fresnel Connection: Particles versus Waves

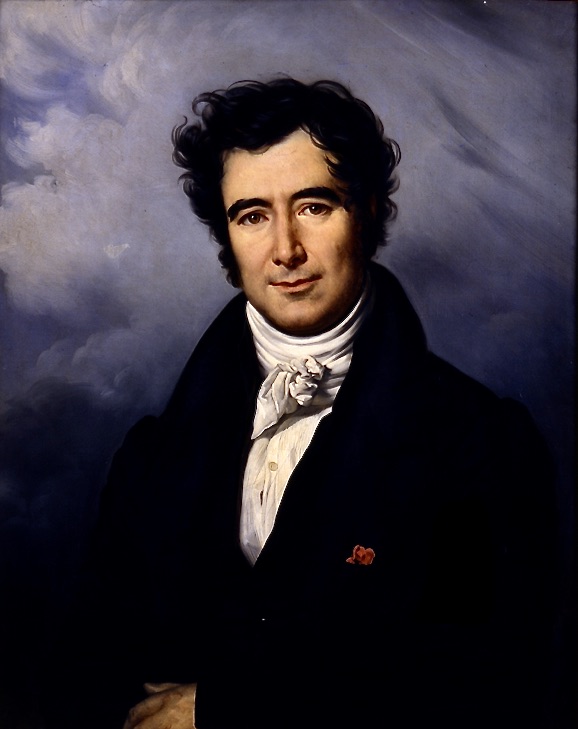

Augustin Fresnel was an intuitive genius whose talents were almost squandered on his job building roads and bridges in the backwaters of France until he was discovered and rescued by François Arago.

Topics: Particles versus Waves. Malus and Polarization. Augustin Fresnel. François Arago. Diffraction. Daniel Bernoulli. The Principle of Superposition. Joseph Fourier. Transverse Light Waves.

1665 – Grimaldi diffraction bands outside shadow

1673 – James Gregory bird-feather diffraction grating

1675 – Rømer finite speed of light

1704 – Newton’s Opticks

1727 – Bradley aberration of starlight

1774 – Jean-Baptiste Biot born

1786 – David Rittenhouse hairs-on-screws diffraction grating

1786 – François Arago born (1786 – 1853)

1787 – Fraunhofer born (1787 – 1826)

1788 – Fresnel born in Broglie, Normandy (1788 – 1827)

1790 – Fresnel moved to Cherbourg

1794 – École Polytechnique founded in Paris by Lazare Carnot and Gaspard Monge

1804 – Fresnel enters the École Polytechnique in Paris at age 16

1806 – Fresnel graduates and enters the national school of bridges and highways (École des Ponts et Chaussées)

1808 – Malus polarization

1809 – Fresnel graduated from Les Ponts

1809 – Arago returns from captivity in Algiers

1811 – Arago publishes paper on particle theory of light

1811 – Arago optical rotatory activity (rotation)

1814 – Fraunhofer spectroscope (solar absorption lines)

1815 – Fresnel meets Arago in Paris on his way home to Mathieu (for house arrest)

1815 – Fresnel first paper on wave properties of diffraction

1816 – Fresnel returns to Paris to demonstrate his experiments

1816 – Arago visits Young

1816 – Fresnel paper on interference as origin of diffraction

1817 – French Academy announces its annual prize competition: topic of diffraction

1817 – Fresnel invents and uses his “Fresnel Integrals”

1819 – Fresnel awarded French Academy prize for wave theory of diffraction

1819 – Arago and Fresnel transverse and circular (?) polarization

1821 – Fraunhofer diffraction grating

1821 – Fresnel light is ONLY transverse

1821 – Fresnel double refraction explanation

1823 – Fraunhofer 3200 lines per Paris inch

1826 – Publication of Fresnel’s award memoire

1827 – Fresnel dies of tuberculosis

1840 – Ernst Abbe born (1840 – 1905)

1849 – Stokes distribution of secondary waves

1850 – Fizeau and Foucault speed of light experiments

3. At Light Speed

There is no question that François Arago was a swashbuckler. His life’s story reads like an adventure novel as he went from being marooned in hostile lands early in his career to becoming prime minister of France after the 1848 revolutions swept across Europe.

Topics: The Birth of Interferometry. Snell’s Law. Fresnel and Arago. The First Interferometer. Fizeau and Foucault. The Speed of Light. Ether Drag. Jamin Interferometer.

1671 – Expedition to Hven by Picard and Rømer

1704 – Newton’s Opticks

1729 – James Bradley observation of stellar aberration

1784 – John Michell dark stars

1804 – Young wave theory of light and ether

1808 – Malus discovery of polarization of reflected light

1810 – Arago search for ether drag

1813 – Fraunhofer dark lines in Sun spectrum

1819 – Fresnel’s double mirror

1820 – Oersted discovers electromagnetism

1821 – Faraday electromagnetic phenomena

1821 – Fresnel light purely transverse

1823 – Fresnel reflection and refraction based on boundary conditions of ether

1827 – Green mathematical analysis of electricity and magnetism

1830 – Cauchy ether as elastic solid

1831 – Faraday electromagnetic induction

1831 – Cauchy ether drag

1831 – Maxwell born

1834 – Lloyd’s mirror

1836 – Cauchy’s second theory of the ether

1838 – Green theory of the ether

1839 – Hamilton group velocity

1839 – MacCullagh properties of rotational ether

1839 – Cauchy ether with negative compressibility

1841 – Maxwell entered Edinburgh Academy (age 10) met P. G. Tait

1842 – Doppler effect

1845 – Faraday effect (magneto-optic rotation)

1846 – Haidinger fringes

1846 – Stokes’ viscoelastic theory of the ether

1847 – Maxwell entered Edinburgh University

1848 – Fizeau proposal of the Fizeau-Doppler effect

1849 – Fizeau speed of light

1850 – Maxwell at Cambridge, studied under Hopkins, also knew Stokes and Whewell

1852 – Michelson born Strelno, Prussia

1854 – Maxwell wins the Smith’s Prize (Stokes’ theorem was one of the problems)

1855 – The Michelsons immigrate to San Francisco via the Isthmus of Panama

1855 – Maxwell “On Faraday’s Lines of Force”

1856 – Jamin interferometer

1856 – Thomson magneto-optics effects (of Faraday)

1857 – Clausius constructs kinetic theory, mean molecular speeds

1859 – Fizeau light in moving medium

1862 – Fizeau fringes

1865 – Maxwell “A Dynamical Theory of the Electromagnetic Field”

1867 – Thomson and Tait “Treatise on Natural Philosophy”

1867 – Thomson hydrodynamic vortex atom

1868 – Fizeau proposal for stellar interferometry

1870 – Maxwell introduced “curl”, “convergence” and “gradient”

1871 – Maxwell appointed to Cambridge

1873 – Maxwell “A Treatise on Electricity and Magnetism”

4. After the Gold Rush

No name is more closely connected to interferometry than that of Albert Michelson. He succeeded, sometimes at great personal cost, in launching interferometric metrology as one of the most important tools used by scientists today.

Topics: The Trials of Albert Michelson. Hermann von Helmholtz. Michelson and Morley. Fabry and Perot.

1810 – Arago search for ether drag

1813 – Fraunhofer dark lines in Sun spectrum

1813 – Faraday begins at Royal Institution

1820 – Oersted discovers electromagnetism

1821 – Faraday electromagnetic phenomena

1827 – Green mathematical analysis of electricity and magnetism

1830 – Cauchy ether as elastic solid

1831 – Faraday electromagnetic induction

1831 – Cauchy ether drag

1831 – Maxwell born

1836 – Cauchy’s second theory of the ether

1838 – Green theory of the ether

1839 – Hamilton group velocity

1839 – MacCullagh properties of rotational ether

1839 – Cauchy ether with negative compressibility

1841 – Maxwell entered Edinburgh Academy (age 10) met P. G. Tait

1842 – Doppler effect

1845 – Faraday effect (magneto-optic rotation)

1846 – Stokes’ viscoelastic theory of the ether

1847 – Maxwell entered Edinburgh University

1850 – Maxwell at Cambridge, studied under Hopkins, also knew Stokes and Whewell

1852 – Michelson born Strelno, Prussia

1854 – Maxwell wins the Smith’s Prize (Stokes’ theorem was one of the problems)

1855 – The Michelsons immigrate to San Francisco via the Isthmus of Panama

1855 – Maxwell “On Faraday’s Lines of Force”

1856 – Jamin interferometer

1856 – Thomson magneto-optics effects (of Faraday)

1859 – Fizeau light in moving medium

1859 – Discovery of the Comstock Lode

1860 – Maxwell publishes first paper on kinetic theory

1861 – Maxwell “On Physical Lines of Force”: speed of EM waves from the molecular vortex model

1862 – Michelson at boarding school in San Francisco

1865 – Maxwell “A Dynamical Theory of the Electromagnetic Field”

1867 – Thomson and Tait “Treatise on Natural Philosophy”

1867 – Thomson hydrodynamic vortex atom

1868 – Fizeau proposal for stellar interferometry

1869 – Michelson meets President Ulysses S. Grant and obtains an appointment to Annapolis

1870 – Maxwell introduced “curl”, “convergence” and “gradient”

1871 – Maxwell appointed to Cambridge

1873 – Big Bonanza at the Consolidated Virginia mine

1873 – Maxwell “A Treatise on Electricity and Magnetism”

1873 – Michelson graduates from Annapolis

1875 – Michelson instructor at Annapolis

1877 – Michelson marries Margaret Heminway

1878 – Michelson’s first measurement of the speed of light, with funds from his father-in-law

1879 – Michelson begins collaborating with Newcomb

1879 – Maxwell proposes second-order effect for ether drift experiments

1879 – Maxwell dies

1880 – Michelson conceives a second-order measurement of relative motion against the ether

1880 – Michelson studies in Europe with Helmholtz in Berlin

1881 – Michelson measurement at Potsdam with funds from Alexander Graham Bell

1882 – Michelson in Paris with Cornu, Mascart and Lippmann

1883 – Michelson joins Case School of Applied Science

1884 – Poynting energy flux vector

1885 – Michelson begins collaboration with Edward Morley of Western Reserve

1885 – Lorentz points out inconsistency of Stokes’ ether model

1885 – Fitzgerald wheel and band model, vortex sponge

1886 – Michelson and Morley repeat the Fizeau moving water experiment

1887 – Michelson and Morley’s five days in July: experiment on motion relative to the ether

1887 – Michelson-Morley experiment published

1887 – Voigt derivation of relativistic Doppler (with coordinate transformations)

1888 – Hertz generation and detection of radio waves

1889 – Michelson moved to Clark University at Worcester

1889 – Fitzgerald contraction

1889 – Lodge cogwheel model of electromagnetism

1890 – Michelson proposes use of interferometry in astronomy

1890 – Thomson devises a mechanical model of MacCullagh’s rotational ether

1890 – Hertz Galileo relativity and ether drag

1891 – Mach-Zehnder interferometer

1891 – Michelson measures diameters of Jupiter’s moons with interferometry

1891 – Thomson vortex electromagnetism

1892 – 1893 Michelson measurement of the Paris meter

1893 – Sirks interferometer

1893 – Michelson moved to University of Chicago to head Physics Dept.

1893 – Lorentz contraction

1894 – Lodge primitive radio demonstration

1895 – Marconi radio

1896 – Rayleigh’s interferometer

1897 – Lodge no ether drag on laboratory scale

1898 – Pringsheim interferometer

1899 – Fabry-Perot interferometer

1899 – Michelson remarried

1901 – 1903 Michelson President of the APS

1905 – Poincaré names the Lorentz transformations

1905 – Einstein’s special theory of Relativity

1907 – Michelson Nobel Prize

1913 – Sagnac interferometer

1916 – Twyman-Green interferometer

1920 – Stellar interferometer on the Hooker 100-inch telescope (Betelgeuse)

1923 – 1927 Michelson presided over the National Academy of Sciences

1931 – Michelson dies

5. Stellar Interference

Learning from his attempts to measure the speed of light through the ether, Michelson realized that the partial coherence of light from astronomical sources could be used to measure their sizes. His first measurements using the Michelson Stellar Interferometer launched a major subfield of astronomy that is one of the most active today.

Topics: Measuring the Stars. Astrometry. Moons of Jupiter. Schwarzschild. Betelgeuse. Michelson Stellar Interferometer. Hanbury Brown and Twiss. Sirius. Adaptive Optics.
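The idea in the paragraph above can be made concrete with the visibility curve of a uniform stellar disk. This is a minimal sketch under stated assumptions: monochromatic light, a uniformly bright circular disk (real stars are limb-darkened, which shifts the null slightly), and hypothetical helper names (`bessel_j1`, `disk_visibility`, `diameter_from_null`) chosen only for illustration. For such a disk the fringe visibility is |2J₁(x)/x| with x = πBθ/λ, where B is the baseline, θ the angular diameter and λ the wavelength, so the fringes first vanish when B reaches about 1.22 λ/θ. Reading off that baseline therefore gives θ. The inputs (575 nm, 3.07 m) are illustrative, close to the figures often quoted for the 1920 Betelgeuse measurement.

```python
import math

# First positive zero of the Bessel function J1.
J1_FIRST_ZERO = 3.831705970207512
ARCSEC_PER_RAD = 180.0 / math.pi * 3600.0


def bessel_j1(x: float, terms: int = 30) -> float:
    """J1(x) from its power series; adequate for the small arguments used here."""
    total = 0.0
    for k in range(terms):
        total += (-1) ** k * (x / 2) ** (2 * k + 1) / (
            math.factorial(k) * math.factorial(k + 1)
        )
    return total


def disk_visibility(baseline_m: float, diameter_rad: float, wavelength_m: float) -> float:
    """Fringe visibility |2 J1(x) / x| for a uniform disk, with x = pi * B * theta / lambda."""
    x = math.pi * baseline_m * diameter_rad / wavelength_m
    if x == 0.0:
        return 1.0  # zero baseline (or a point source) gives full-contrast fringes
    return abs(2.0 * bessel_j1(x) / x)


def diameter_from_null(wavelength_m: float, null_baseline_m: float) -> float:
    """Angular diameter (radians) implied by the baseline where the fringes first vanish."""
    # The first zero of 2 J1(x)/x gives theta = (3.8317 / pi) * lambda / B, about 1.22 lambda / B.
    return (J1_FIRST_ZERO / math.pi) * wavelength_m / null_baseline_m


if __name__ == "__main__":
    wavelength = 575e-9  # metres (illustrative effective wavelength)
    baseline = 3.07      # metres (illustrative mirror separation at the fringe null)
    theta = diameter_from_null(wavelength, baseline)
    print(f"angular diameter ~ {theta * ARCSEC_PER_RAD:.3f} arcsec")  # prints 0.047
    for b in (0.5, 1.5, 2.5, baseline):
        print(f"B = {b:.2f} m -> visibility {disk_visibility(b, theta, wavelength):.3f}")
```

In this idealized model the fringe contrast falls steadily as the baseline grows and reaches zero at the null, which is why widening the mirror separation until the fringes disappear yields the size of the star.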

1838 – Bessel stellar parallax measurement with Fraunhofer telescope

1868 – Fizeau proposes stellar interferometry

1873 – Stephan implements Fizeau’s stellar interferometer on Sirius, sees fringes

1880 – Michelson conceives a second-order measurement of relative motion against the ether

1880 – 1882 Michelson studies in Europe (Helmholtz in Berlin, Quincke in Heidelberg, Cornu, Mascart and Lippmann in Paris)

1881 – Michelson measurement at Potsdam with funds from Alexander Graham Bell

1881 – Michelson resigns from active duty in the Navy

1883 – Michelson joins Case School of Applied Science

1889 – Michelson moved to Clark University at Worcester

1890 – Michelson develops mathematics of stellar interferometry

1891 – Michelson measures diameters of Jupiter’s moons

1893 – Michelson moves to University of Chicago to head Physics Dept.

1896 – Schwarzschild double star interferometry

1907 – Michelson Nobel Prize

1908 – Hale uses Zeeman effect to measure sunspot magnetism

1909 – Taylor single-photon double-slit experiment

1915 – Proxima Centauri discovered by Robert Innes

1916 – Einstein predicts gravitational waves

1920 – Stellar interferometer on the Hooker 100-inch telescope (Betelgeuse)

1947 – McCready sea interferometer observes rising sun (first fringes in radio astronomy)

1952 – Ryle radio astronomy long baseline

1954 – Hanbury-Brown and Twiss radio intensity interferometry

1956 – Hanbury-Brown and Twiss optical intensity correlation, Sirius (optical)

1958 – Jennison closure phase

1970 – Labeyrie speckle interferometry

1974 – Long-baseline radio interferometry in practice using closure phase

1974 – Johnson, Betz and Townes: IR long baseline

1975 – Labeyrie optical long-baseline

1982 – Fringe measurements at 2.2 microns (Di Benedetto)

1985 – Baldwin closure phase at optical wavelengths

1991 – Coudé du Foresto single-mode fibers with separated telescopes

1993 – Nobel prize to Hulse and Taylor for binary pulsar

1995 – Baldwin optical synthesis imaging with separated telescopes

1995 – Mayor and Queloz Doppler pull of 51 Pegasi

1999 – Upsilon Andromedae multiple planets

2009 – Kepler space telescope launched

2014 – Kepler announces 715 planets

2015 – Kepler-452b Earthlike planet in habitable zone

2015 – First detection of gravitational waves

2016 – Proxima Centauri b exoplanet confirmed

2017 – Nobel prize for gravitational waves

2018 – TESS (Transiting Exoplanet Survey Satellite) launched

2019 – Mayor and Queloz win Nobel prize for first exoplanet

2019 – First direct observation of exoplanet using interferometry

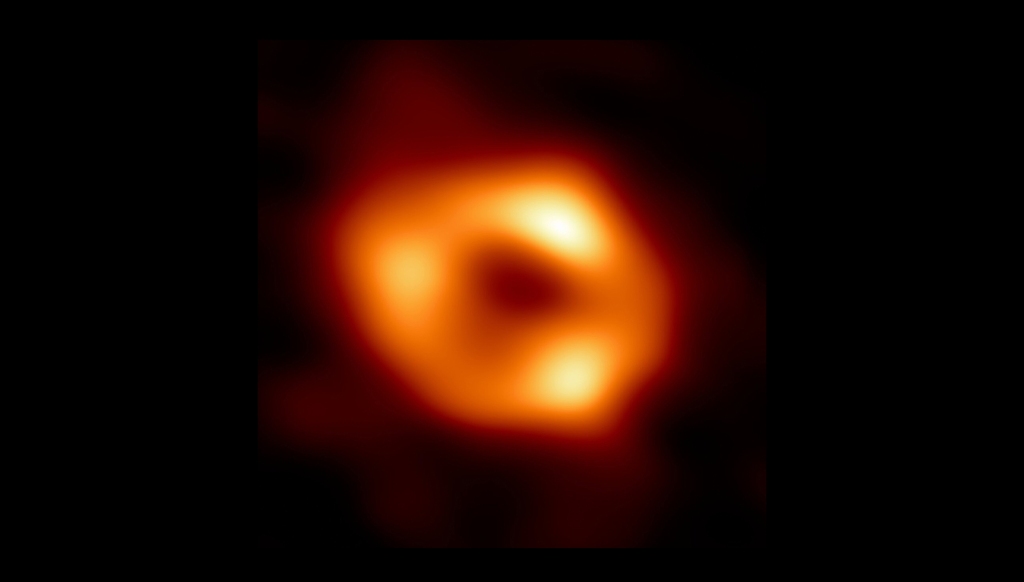

2019 – First image of a black hole obtained by very-long-baseline interferometry

6. Across the Universe

Stellar interferometry is opening new vistas of astronomy, exploring the wildest occupants of our universe, from colliding black holes half-way across the universe (LIGO) to images of neighboring black holes (EHT) to exoplanets near Earth that may harbor life.

Topics: Gravitational Waves, Black Holes and the Search for Exoplanets. Nulling Interferometer. Event Horizon Telescope. M87 Black Hole. Long Baseline Interferometry. LIGO.

1947 – Virgo A radio source identified as M87

1953 – Horace W. Babcock proposes adaptive optics (AO)

1958 – Jennison closure phase

1967 – First very long baseline radio interferometers (from meters to hundreds of km to thousands of km within a single year)

1967 – Rainer Weiss begins first prototype gravitational wave interferometer

1967 – Virgo X-1 x-ray source (M87 galaxy)

1970 – Poul Anderson’s Tau Zero alludes to AO in science fiction novel

1973 – DARPA launches adaptive optics research with contract to Itek, Inc.

1974 – Wyant (Itek) white-light shearing interferometer

1974 – Long-baseline radio interferometry in practice using closure phase

1975 – Hardy (Itek) patent for adaptive optical system

1975 – Weiss funded by NSF to develop interferometer for GW detection

1977 – Demonstration of AO on Sirius (Bell Labs and Berkeley)

1980 – Very Large Array (VLA) 6 mm to 4 meter wavelengths

1981 – Feinleib proposes atmospheric laser backscatter

1982 – Will Happer at Princeton proposes sodium guide star

1982 – Fringe measurements at 2.2 microns (Di Benedetto)

1983 – Sandia Optical Range demonstrates artificial guide star (Rayleigh)

1983 – Strategic Defense Initiative (Star Wars)

1984 – Lincoln Laboratory sodium guide star demo

1984 – ESO plans AO for Very Large Telescope (VLT)

1985 – Laser guide star (Labeyrie)

1985 – Closure phase at optical wavelengths (Baldwin)

1988 – AFWL names Starfire Optical Range, Kirtland AFB outside Albuquerque

1988 – Air Force Maui Optical Site Shack-Hartmann and 241 actuators (Itek)

1988 – First funding for LIGO feasibility

1989 – 19-element-mirror Double star on 1.5m telescope in France

1989 – VLT approved for construction

1990 – Launch of the Hubble Space Telescope

1991 – Single-mode fibers with separated telescopes (Coudé du Foresto)

1992 – ADONIS

1992 – NSF requests declassification of AO

1993 – VLBA (Very Long Baseline Array) 8,611 km baseline 3 mm to 90 cm

1994 – Declassification completed

1994 – Curvature sensor 3.6m Canada-France-Hawaii

1994 – LIGO funded by NSF, Barish becomes project director

1995 – Optical synthesis imaging with separated telescopes (Baldwin)

1995 – Doppler pull of 51 Pegasi (Mayor and Queloz)

1998 – ESO VLT first light

1998 – Keck installed with Shack-Hartmann

1999 – Upsilon Andromedae multiple planets

2000 – Hale 5m Palomar Shack-Hartmann

2001 – NAOS-VLT adaptive optics

2001 – VLTI first light (MIDI two units)

2002 – LIGO operation begins

2007 – VLT laser guide star

2007 – VLTI AMBER first scientific results (3 units)

2009 – Kepler space telescope launched

2009 – Event Horizon Telescope (EHT) project starts

2010 – Large Binocular Telescope (LBT) 672 actuators on secondary mirror

2010 – End of first LIGO run. No events detected. Begin Enhanced LIGO upgrade.

2011 – SPHERE-VLT 41×41 actuators (1681)

2012 – Extremely Large Telescope (ELT) approved for construction

2014 – Kepler announces 715 planets

2015 – Kepler-452b Earthlike planet in habitable zone

2015 – First detection of gravitational waves (LIGO)

2015 – LISA Pathfinder launched

2016 – Second detection at LIGO

2016 – Proxima Centauri b exoplanet confirmed

2016 – GRAVITY VLTI (4 units)

2017 – Nobel prize for gravitational waves

2018 – TESS (Transiting Exoplanet Survey Satellite) launched

2018 – MATISSE VLTI first light (combining all units)

2019 – Mayor and Queloz win Nobel prize

2019 – First direct observation of exoplanet using interferometry at VLTI

2019 – First image of a black hole obtained by very-long-baseline interferometry (EHT)

2020 – First neutron-star black-hole merger detected

2020 – KAGRA (Japan) online

2024 – LIGO India to go online

2025 – First light for ELT

2034 – Launch date for LISA

7. Two Faces of Microscopy

From the astronomically large dimensions of outer space to the microscopically small dimensions of inner space, optical interference pushes the resolution limits of imaging.

Topics: Diffraction and Interference. Joseph Fraunhofer. Diffraction Gratings. Henry Rowland. Carl Zeiss. Ernst Abbe. Phase-contrast Microscopy. Super-resolution Microscopes. Structured Illumination.

1021 – Alhazen (Ibn al-Haytham) Book of Optics

1284 – First eyeglasses by Salvino D’Armate

1590 – Janssen first microscope

1609 – Galileo first compound microscope

1625 – Giovanni Faber coins the term “microscope”

1665 – Hooke’s Micrographia

1676 – Antonie van Leeuwenhoek microscope

1787 – Fraunhofer born

1811 – Fraunhofer enters business partnership with Utzschneider

1816 – Carl Zeiss born

1821 – Fraunhofer first diffraction publication

1823 – Fraunhofer second diffraction publication 3200 lines per Paris inch

1830 – Spherical aberration compensated by Joseph Jackson Lister

1840 – Ernst Abbe born

1846 – Zeiss workshop in Jena, Germany

1850 – Fizeau and Foucault speed of light

1851 – Otto Schott born

1859 – Kirchhoff and Bunsen theory of emission and absorption spectra

1866 – Abbe becomes research director at Zeiss

1874 – Ernst Abbe equation on microscope resolution

1874 – Helmholtz image resolution equation

1880 – Rayleigh resolution

1888 – Hertz waves

1888 – Frits Zernike born

1925 – Zsigmondy Nobel Prize in Chemistry (ultramicroscope, a forerunner of light-sheet microscopy)

1931 – Transmission electron microscope by Ruska and Knoll

1932 – Phase contrast microscope by Zernike

1942 – Scanning electron microscope by Ruska

1949 – Mirau interferometric objective

1952 – Nomarski differential interference contrast (DIC) microscope

1953 – Zernike Nobel prize

1955 – First discussion of superresolution by Toraldo di Francia

1957 – Marvin Minsky patents confocal principle

1962 – Green fluorescent protein (GFP) Shimomura, Johnson and Saiga

1966 – Structured illumination microscopy by Lukosz

1972 – CAT scan

1978 – Cremer confocal laser scanning microscope

1978 – Lohman interference microscopy

1981 – Binnig and Rohrer scanning tunneling microscope (STM)

1986 – Microscopy Nobel Prize: Ruska, Binnig and Rohrer

1990 – 4Pi microscopy by Stefan Hell

1992 – GFP cloned

1993 – STED by Stefan Hell

1993 – Light sheet fluorescence microscopy by Spelman

1995 – Structured illumination microscopy by Guerra

1995 – Gustafsson image interference microscopy

1999 – Gustafsson I5M

2004 – Selective plane illumination microscopy (SPIM)

2006 – PALM and STORM (Betzig and Zhuang)

2014 – Nobel Prize (Hell, Betzig and Moerner)

8. Holographic Dreams of Princess Leia

The coherence of laser light is like a brilliant jewel that sparkles in the darkness, illuminating life, probing science and projecting holograms in virtual worlds.

Topics: Crossing Beams. Dennis Gabor. Wavefront Reconstruction. Holography. Emmett Leith. Lasers. Ted Maiman. Charles Townes. Optical Maser. Dynamic Holography. Light-field Imaging.

1900 – Dennis Gabor born

1926 – Hans Busch magnetic electron lens

1927 – Gabor doctorate

1931 – Ruska and Knoll first two-stage electron microscope

1942 – Lawrence Bragg x-ray microscope

1948 – Gabor holography paper in Nature

1949 – Gabor moves to Imperial College

1950 – Lamb possibility of population inversion

1951 – Purcell and Pound demonstration of population inversion

1952 – Leith joins Willow Run Labs

1953 – Townes first MASER

1957 – SAR field trials

1957 – Gould coins LASER

1958 – Schawlow and Townes proposal for optical maser

1959 – Shawanga Lodge conference

1960 – Maiman first laser: pink ruby

1960 – Javan first gas laser: HeNe at 1.15 microns

1961 – Leith and Upatnieks wavefront reconstruction

1962 – HeNe laser in the visible at 632.8 nm

1962 – First laser holograms (Leith and Upatnieks)

1963 – van Heerden optical information storage

1963 – Leith and Upatnieks 3D holography

1966 – Ashkin optically-induced refractive index changes

1966 – Leith holographic information storage in 3D

1968 – Bell Labs holographic storage in Lithium Niobate and Tantalate

1969 – Kogelnik coupled wave theory for thick holograms

1969 – Electrical control of holograms in SBN

1970 – Optically induced refractive index changes in Barium Titanate

1971 – Amodei transport models of photorefractive effect

1971 – Gabor Nobel prize

1972 – Staebler multiple holograms

1974 – Glass and von der Linde photovoltaic and photorefractive effects, UV erase

1977 – Star Wars movie

1981 – Huignard two-wave mixing energy transfer

2012 – Coachella Music Festival

9. Photon Interference

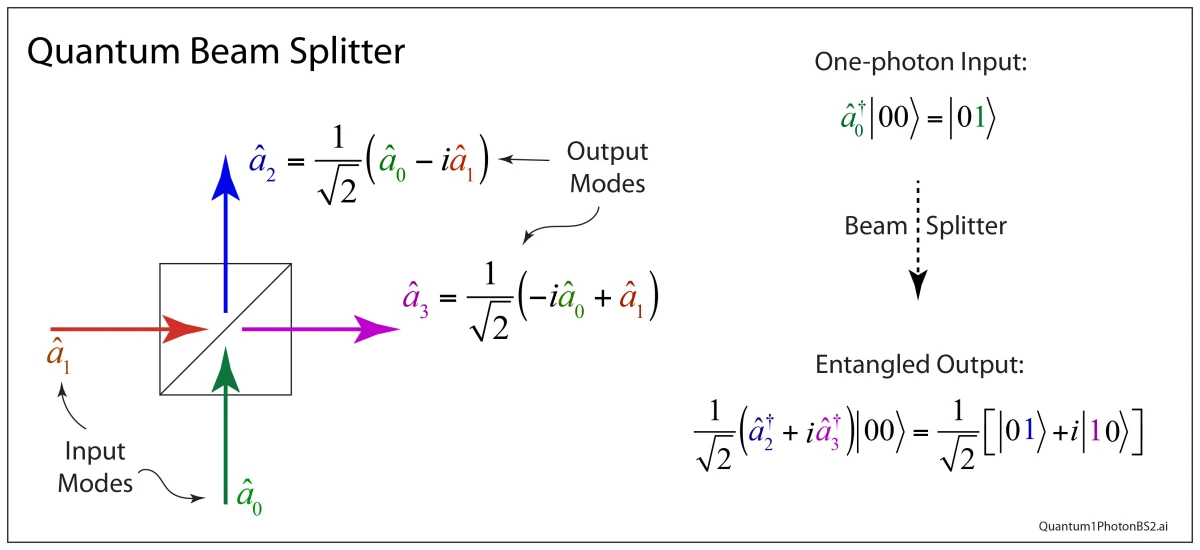

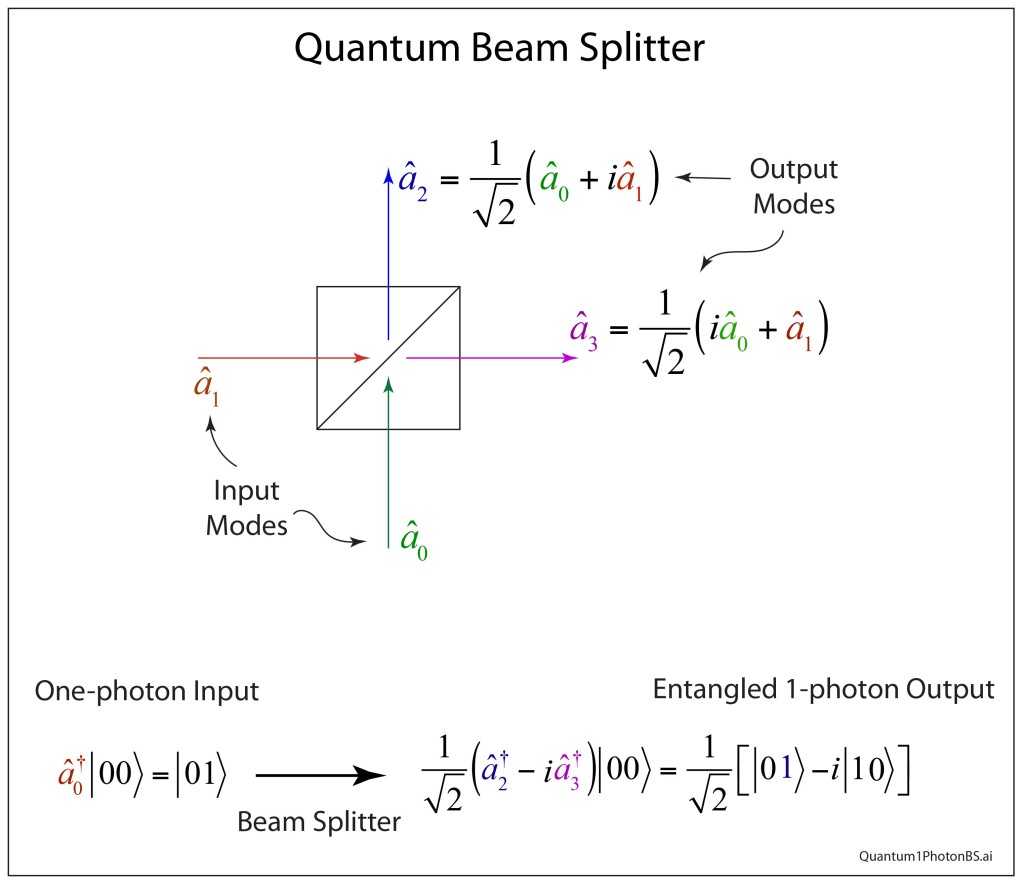

What is the image of one photon interfering? Better yet, what is the image of two photons interfering? The answer to this crucial question laid the foundation for quantum communication.

Topics: The Beginnings of Quantum Communication. EPR paradox. Entanglement. David Bohm. John Bell. The Bell Inequalities. Leonard Mandel. Single-photon Interferometry. HOM Interferometer. Two-photon Fringes. Quantum cryptography. Quantum Teleportation.

1900 – Planck (1901). “Law of energy distribution in normal spectra.” [1]

1905 – A. Einstein (1905). “Generation and conversion of light with respect to a heuristic point of view.” [2]

1909 – A. Einstein (1909). “On the current state of radiation problems.” [3]

1909 – Single photon double-slit experiment, G.I. Taylor [4]

1915 – Millikan photoelectric effect

1916 – Einstein predicts stimulated emission

1923 – Compton, Arthur H. (May 1923). “Quantum Theory of the Scattering of X-Rays.” [5]

1926 – Gilbert Lewis names “photon”

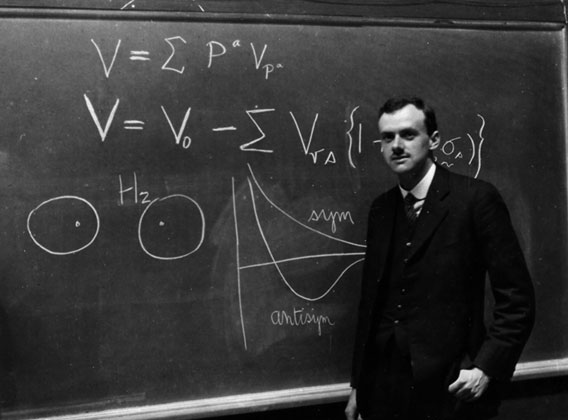

1926 – Dirac: photons interfere only with themselves

1927 – Dirac, P. A. M. (1927). “Emission and absorption of radiation.” [6]

1932 – von Neumann textbook on quantum physics

1932 – E. P. Wigner: Phys. Rev. 40, 749 (1932)

1935 – EPR paper, A. Einstein, B. Podolsky, N. Rosen: Phys. Rev. 47, 777 (1935)

1935 – Reply to EPR, N. Bohr: Phys. Rev. 48, 696 (1935)

1935 – Schrödinger (1935 and 1936) on entanglement (cat?) “Present situation in QM”

1948 – Gabor holography

1950 – Wu correlated spin generation from particle decay

1951 – Bohm alternative form of EPR gedankenexperiment (quantum textbook)

1952 – Bohm nonlocal hidden variable theory [7]

1953 – Schwinger: Coherent states

1956 – Photon bunching, R. Hanbury-Brown, R.W. Twiss: Nature 177, 27 (1956)

1957 – Bohm and Aharonov proof of entanglement in 1950 Wu experiment

1959 – Aharonov-Bohm effect of magnetic vector potential

1960 – Klauder: Coherent states

1963 – Coherent states, R. J. Glauber: Phys. Rev. 130, 2529 (1963)

1963 – Coherent states, E. C. G. Sudarshan: Phys. Rev. Lett. 10, 277 (1963)

1964 – J. S. Bell: Bell inequalities [8]

1964 – Mandel professorship at Rochester

1967 – Interference at single photon level, R. F. Pfleegor, L. Mandel: [9]

1967 – M. O. Scully, W.E. Lamb: Phys. Rev. 159, 208 (1967) Quantum theory of laser

1967 – Parametric converter (Mollow and Glauber) [10]

1967 – Kocher and Commins calcium 2-photon cascade

1969 – Quantum theory of laser, M. Lax, W.H. Louisell: Phys. Rev. 185, 568 (1969)

1969 – CHSH inequality [11]

1972 – First test of Bell’s inequalities (Freedman and Clauser)

1975 – Carmichael and Walls predict that light in resonance fluorescence from a two-level atom displays photon antibunching (published 1976)

1977 – Photon antibunching in resonance fluorescence. H. J. Kimble, M. Dagenais and L. Mandel [12]

1978 – Kip Thorne quantum non-demolition (QND)

1979 – Hollenhorst squeezing for gravitational wave detection: names squeezing

1982 – Aspect experimental Bell tests [13]

1985 – Dick Slusher experimental squeezing

1985 – Deutsch quantum algorithm

1986 – Photon anti-bunching at a beamsplitter, P. Grangier, G. Roger, A. Aspect: [14]

1986 – Kimble squeezing in parametric down-conversion

1986 – C. K. Hong, L. Mandel: Phys. Rev. Lett. 56, 58 (1986) one-photon localization

1987 – Two-photon interference (Ghosh and Mandel) [15]

1987 – HOM effect [16]

1987 – Photon squeezing, P. Grangier, R. E. Slusher, B. Yurke, A. La Porta: [17]

1987 – Grangier and Slusher, squeezed light interferometer

1988 – 2-photon Bell violation: Z. Y. Ou, L. Mandel: Phys. Rev. Lett. 61, 50 (1988)

1988 – Brassard Quantum cryptography

1989 – Franson proposes two-photon interference in k-number (?)

1990 – Two-photon interference in k-number (Kwiat and Chiao)

1990 – Two-photon interference (Ou, Zhou, Wang and Mandel)

1993 – Quantum teleportation proposal (Bennett)

1994 – Teleportation of quantum states (Vaidman)

1994 – Shor factoring algorithm

1995 – Down-conversion for polarization: Kwiat and Zeilinger (1995)

1997 – Experimental quantum teleportation (Bouwmeester)

1997 – Experimental quantum teleportation (Boschi)

1998 – Unconditional quantum teleportation (every state) (Furusawa)

2001 – Quantum computing with linear optics (Knill, Laflamme, Milburn)

2013 – LIGO design proposal with squeezed light (Aasi)

2019 – Squeezing upgrade on LIGO (Tse)

2020 – Quantum computational advantage (Zhong)

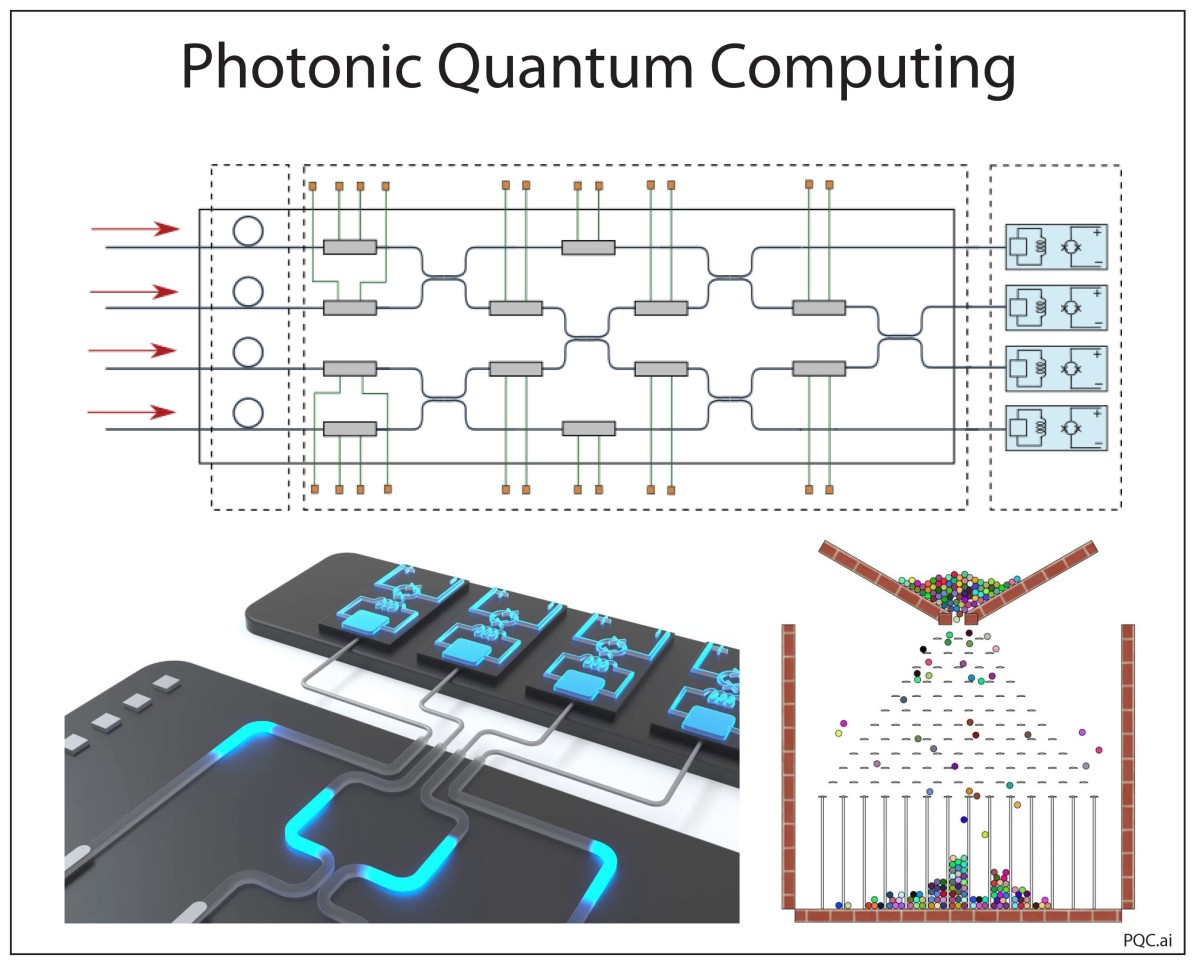

10. The Quantum Advantage

There is almost no technical advantage better than having exponential resources at hand. The exponential resources of quantum interference provide that advantage to quantum computing, which is poised to usher in a new era of quantum information science and technology.

Topics: Interferometric Computing. David Deutsch. Quantum Algorithm. Peter Shor. Prime Factorization. Quantum Logic Gates. Linear Optical Quantum Computing. Boson Sampling. Quantum Computational Advantage.

1980 – Paul Benioff describes possibility of quantum computer

1981 – Feynman simulating physics with computers

1985 – Deutsch quantum Turing machine [18]

1987 – Quantum properties of beam splitters

1992 – Deutsch-Jozsa algorithm is exponentially faster than classical

1993 – Quantum teleportation described

1994 – Shor factoring algorithm [19]

1994 – First quantum computing conference

1995 – Shor error correction

1995 – Universal gates

1996 – Grover search algorithm

1998 – First demonstration of quantum error correction

1999 – Nakamura and Tsai superconducting qubits

2001 – Superconducting nanowire photon detectors

2001 – Linear optics quantum computing (KLM)

2001 – One-way quantum computer

2003 – All-optical quantum gate in a quantum dot (Li)

2003 – All-optical quantum CNOT gate (O’Brien)

2003 – Decoherence and einselection (Zurek)

2004 – Teleportation across the Danube

2005 – Experimental quantum one-way computing (Walther)

2007 – Teleportation across 144 km (Canary Islands)

2008 – Quantum discord computing

2011 – D-Wave Systems offers commercial quantum computer

2011 – Aaronson boson sampling

2012 – 1QB Information Technologies, first quantum software company

2013 – Experimental demonstrations of boson sampling

2014 – Teleportation on a chip

2015 – Universal linear optical quantum computing (Carolan)

2017 – Teleportation to a satellite

2019 – Generation of a 2D cluster state (Larsen)

2019 – Quantum supremacy [20]

2020 – Quantum optical advantage [21]

2021 – Programmable quantum photonic chip

References:

[1] Annalen der Physik 4(3): 553-563.

[2] Annalen der Physik 17(6): 132-148.

[3] Physikalische Zeitschrift 10: 185-193.

[4] Proc. Cam. Phil. Soc. Math. Phys. Sci. 15, 114 (1909)

[5] Physical Review. 21 (5): 483–502.

[6] Proceedings of the Royal Society of London, Series A, Containing Papers of a Mathematical and Physical Character 114(767): 243-265.

[7] D. Bohm, “A suggested interpretation of the quantum theory in terms of hidden variables .1,” Physical Review, vol. 85, no. 2, pp. 166-179, (1952)

[8] Physics 1, 195 (1964); Rev. Mod. Phys. 38, 447 (1966)

[9] Phys. Rev. 159, 1084 (1967)

[10] B. R. Mollow, R. J. Glauber: Phys. Rev. 160, 1097 (1967); 162, 1256 (1967)

[11] J. F. Clauser, M. A. Horne, A. Shimony, and R. A. Holt, “Proposed experiment to test local hidden-variable theories,” Physical Review Letters, vol. 23, no. 15, pp. 880-884, (1969)

[12] (1977) Phys. Rev. Lett. 39, 691-5

[13] A. Aspect, P. Grangier, G. Roger: Phys. Rev. Lett. 49, 91 (1982). A. Aspect, J. Dalibard, G. Roger: Phys. Rev. Lett. 49, 1804 (1982)

[14] Europhys. Lett. 1, 173 (1986)

[15] R. Ghosh and L. Mandel, “Observation of nonclassical effects in the interference of 2 photons,” Physical Review Letters, vol. 59, no. 17, pp. 1903-1905, Oct (1987)

[16] C. K. Hong, Z. Y. Ou, and L. Mandel, “Measurement of subpicosecond time intervals between 2 photons by interference,” Physical Review Letters, vol. 59, no. 18, pp. 2044-2046, Nov (1987)

[17] Phys. Rev. Lett. 59, 2153 (1987)

[18] D. Deutsch, “Quantum theory, the Church-Turing principle and the universal quantum computer,” Proceedings of the Royal Society of London, Series A: Mathematical, Physical and Engineering Sciences, vol. 400, no. 1818, pp. 97-117, (1985)

[19] P. W. Shor, “Algorithms for quantum computation: discrete logarithms and factoring,” in Proceedings 35th Annual Symposium on Foundations of Computer Science, S. Goldwasser, Ed., 1994, pp. 124-134.

[20] F. Arute et al., “Quantum supremacy using a programmable superconducting processor,” Nature, vol. 574, no. 7779, pp. 505-510, Oct 24 (2019)

[21] H.-S. Zhong et al., “Quantum computational advantage using photons,” Science, vol. 370, no. 6523, p. 1460, (2020)