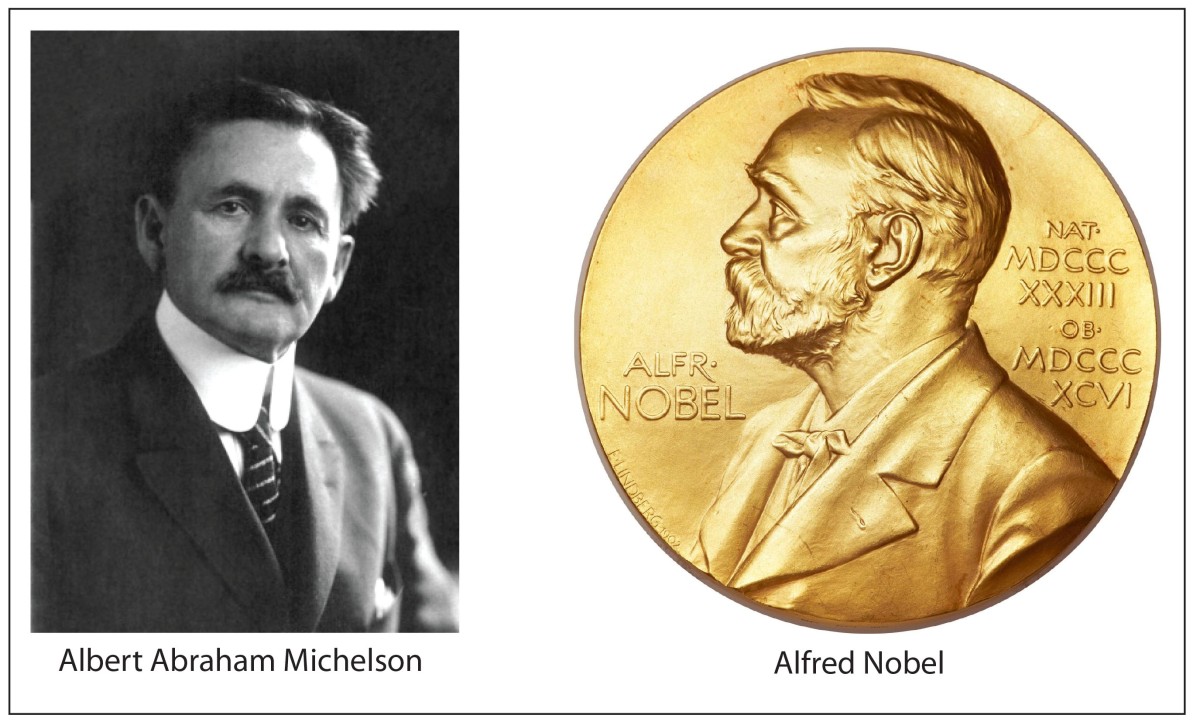

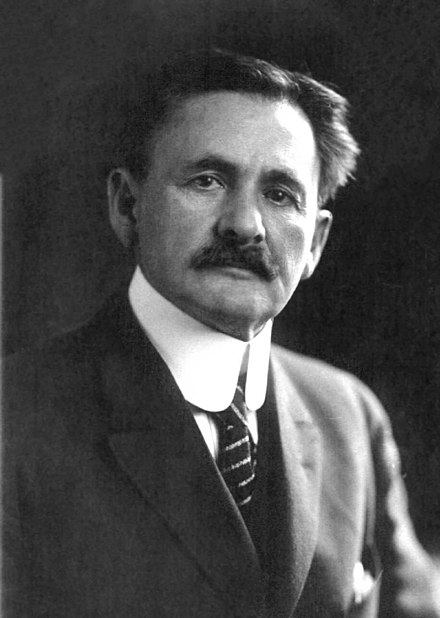

Albert Michelson was the first American to win a Nobel Prize in science. He was awarded the Nobel Prize in physics in 1907 for the invention of his eponymous interferometer and for its development as a precision tool for metrology. Aboard the ship traveling from London to Sweden to receive his medal, he was insulted by the British author Rudyard Kipling (that year’s Nobel laureate in literature), who quipped that America was filled with ignorant masses who wouldn’t amount to anything.

Notwithstanding Kipling’s prediction, across the following century, Americans were awarded 96 Nobel prizes in physics. The next closest countries were Germany with 28, the United Kingdom with 25 and France with 18. These are ratios of roughly 3:1, 4:1 and 5:1. Why was the United States so dominant, and why was Rudyard Kipling so wrong?

At the same time that American scientists were garnering the lion’s share of Nobel prizes in physics in the 20th century, the American real (inflation-adjusted) gross domestic product (GDP) grew from 60 billion dollars to 20 trillion dollars, making up about a third of worldwide GDP, even though the country has only about 5% of the world’s population. So once again, why was the United States so dominant across the last century? What factors contributed to this success?

The answers are complicated, with many contributing factors and lots of shades of gray. But two factors stand out that grew hand-in-hand over the century; these are:

1) The striking rise of American elite universities, and

2) The significant gain in the US brain trust through immigration.

Albert Michelson is a case in point.

The Firestorms of Albert Michelson

Albert Abraham Michelson was, to some, an undesirable immigrant, born poor in Poland to a Jewish family who made the arduous journey across the Isthmus of Panama (decades before there was a canal) in the second wave of 49ers swarming over the California gold country. Michelson grew up in the Wild West, first in the rough town of Murphy’s Camp in California, in the foothills of the Sierras. After his father’s supply store went up in flames, they moved to Virginia City, Nevada. His younger brother Charlie lived by the gun (after Michelson had left home), providing meat and protection for supply trains during the Apache wars in the Southwest. This was America in the raw.

Yet Michelson was a prodigy. He outgrew the meager educational possibilities in the mining towns, so his family scraped together enough money to send him to a school in San Francisco, where he excelled. Later, in Virginia City, an academic competition was held for a special appointment to the Naval Academy in Annapolis. Michelson tied for first place, but the appointment went to the other student, who was the son of a Civil War veteran.

With the support of the local Jewish community, Michelson took a train to Washington, DC (traveling on the newly completed Transcontinental Railroad, passing over the spot where a golden spike had been driven one month prior into a railroad tie made of Californian laurel) to make his case directly. He met with President Grant at the White House, but all the slots at Annapolis had been filled. Undaunted, Michelson camped out for three days in the waiting room of the office of an Annapolis Admiral, who finally relented and allowed Michelson to take the entrance exam. Still, there was no place for him at the Academy.

Discouraged, Michelson bought a ticket and boarded the train for home. One can only imagine his shock when he heard his name called out by someone walking down the car aisle. It was a courier from the White House. Michelson met again with Grant, who made an extraordinary extra appointment for Michelson at Annapolis; the Admiral had made his case for him. With no time to return home, he was on board ship for his first training cruise within a week, returning a month later to start classes.

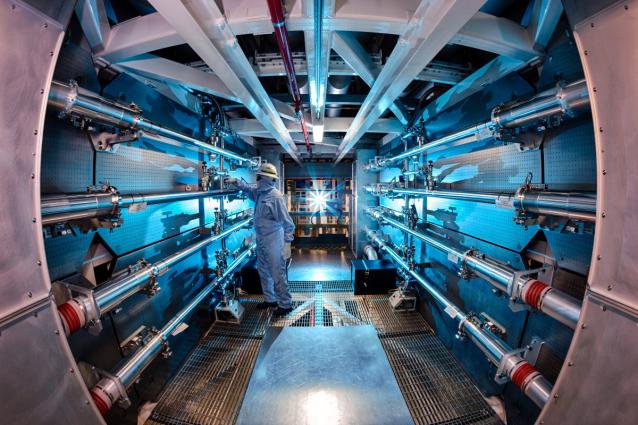

Years later, as Michelson prepared, with Edward Morley, to perform the most sensitive test ever made of the motion of the Earth, using his recently invented “Michelson Interferometer”, the building with his lab went up in flames, just as his father’s supply store had years before. This was a trying time for Michelson. His first marriage was on the rocks, and he had just recovered from a nervous breakdown (his wife at one point tried to have him committed to an insane asylum from which patients rarely returned). Yet with Morley’s help, he completed the measurement.

To Michelson’s dismay, the exquisite experiment with the finest sensitivity—that should have detected a large deviation of the fringes depending on the orientation of the interferometer relative to the motion of the Earth through space—gave a null result. They published their findings, anyway, as one more puzzle in the question of the speed of light, little knowing how profound this “Michelson-Morley” experiment would be in the history of modern physics and the subsequent development of the relativity theory of Albert Einstein (another immigrant).

Putting the disappointing null result behind him, Michelson next turned his ultra-sensitive interferometer to the problem of replacing the platinum meter-bar standard in Paris with a new standard that was much more fundamental: wavelengths of light. It was this practical success in metrology, not the null result with Morley, for which he was awarded the Nobel Prize in 1907.

Michelson’s Nobel Prize in physics in 1907 did not immediately open the floodgates. Sixteen years passed before the next Nobel in physics went to an American (Robert Millikan). But after 1936 (as many exiles from fascism in Europe immigrated to the US) Americans were regularly among the prize winners.

List of American Nobel Prizes in Physics

* (I) designates an immigrant.

- 1907 Albert Michelson (I) Optical precision instruments and metrology

- 1923 Robert Millikan Elementary charge and photoelectric effect

- 1927 Arthur Compton The Compton effect

- 1936 Carl David Anderson Discovery of the positron

- 1937 Clinton Davisson Diffraction of electrons by crystals

- 1939 Ernest Lawrence Invention of the cyclotron

- 1943 Otto Stern (I) Magnetic moment of the proton

- 1944 Isidor Isaac Rabi (I) Magnetic properties of atomic nuclei

- 1946 Percy Bridgman High pressure physics

- 1952 E. M. Purcell Nuclear magnetic precision measurements

- 1952 Felix Bloch (I) Nuclear magnetic precision measurements

- 1955 Willis Lamb Fine structure of the hydrogen spectrum

- 1955 Polykarp Kusch (I) Magnetic moment of the electron

- 1956 William Shockley (I) Discovery of the transistor effect

- 1956 John Bardeen Discovery of the transistor effect

- 1956 Walter H. Brattain (I) Discovery of the transistor effect

- 1957 Chen Ning Yang (I) Parity laws of elementary particles

- 1957 Tsung-Dao Lee (I) Parity laws of elementary particles

- 1959 Owen Chamberlain Discovery of the antiproton

- 1959 Emilio Segrè (I) Discovery of the antiproton

- 1960 Donald Glaser Invention of the bubble chamber

- 1961 Robert Hofstadter The structure of nucleons

- 1963 Maria Goeppert-Mayer (I) Nuclear shell structure

- 1963 Eugene Wigner (I) Fundamental symmetry principles

- 1964 Charles Townes Quantum electronics

- 1965 Richard Feynman Quantum electrodynamics

- 1965 Julian Schwinger Quantum electrodynamics

- 1967 Hans Bethe (I) Theory of nuclear reactions

- 1968 Luis Alvarez Hydrogen bubble chamber

- 1969 Murray Gell-Mann Classification of elementary particles and interactions

- 1972 John Bardeen Theory of superconductivity

- 1972 Leon N. Cooper Theory of superconductivity

- 1972 Robert Schrieffer Theory of superconductivity

- 1973 Ivar Giaever (I) Tunneling phenomena

- 1975 Ben Roy Mottelson The structure of the atomic nucleus

- 1975 James Rainwater The structure of the atomic nucleus

- 1976 Burton Richter Discovery of a heavy elementary particle

- 1976 Samuel C. C. Ting Discovery of a heavy elementary particle

- 1977 Philip Anderson Magnetic and disordered systems

- 1977 John van Vleck Magnetic and disordered systems

- 1978 Robert Wilson Discovery of cosmic microwave background radiation

- 1978 Arno Penzias (I) Discovery of cosmic microwave background radiation

- 1979 Steven Weinberg Unified weak and electromagnetic interaction

- 1979 Sheldon Glashow Unified weak and electromagnetic interaction

- 1980 James Cronin Symmetry principles in the decay of neutral K-mesons

- 1980 Val Fitch Symmetry principles in the decay of neutral K-mesons

- 1981 Nicolaas Bloembergen (I) Nonlinear Optics

- 1981 Arthur Schawlow Development of laser spectroscopy

- 1982 Kenneth Wilson Theory for critical phenomena and phase transitions

- 1983 William Fowler Formation of the chemical elements in the universe

- 1983 Subrahmanyan Chandrasekhar (I) The evolution of the stars

- 1988 Leon Lederman Discovery of the muon neutrino

- 1988 Melvin Schwartz Discovery of the muon neutrino

- 1988 Jack Steinberger (I) Discovery of the muon neutrino

- 1989 Hans Dehmelt (I) Ion trap

- 1989 Norman Ramsey Atomic clocks

- 1990 Jerome Friedman Deep inelastic scattering of electrons on nucleons

- 1990 Henry Kendall Deep inelastic scattering of electrons on nucleons

- 1993 Russell Hulse Discovery of a new type of pulsar

- 1993 Joseph Taylor Jr. Discovery of a new type of pulsar

- 1994 Clifford Shull Neutron diffraction

- 1995 Martin Perl Discovery of the tau lepton

- 1995 Frederick Reines Detection of the neutrino

- 1996 David Lee Discovery of superfluidity in helium-3

- 1996 Douglas Osheroff Discovery of superfluidity in helium-3

- 1996 Robert Richardson Discovery of superfluidity in helium-3

- 1997 Steven Chu Laser atom traps

- 1997 William Phillips Laser atom traps

- 1998 Horst Störmer (I) Fractionally charged quantum Hall effect

- 1998 Robert Laughlin Fractionally charged quantum Hall effect

- 1998 Daniel Tsui (I) Fractionally charged quantum Hall effect

- 2000 Jack Kilby Integrated circuit

- 2001 Eric Cornell Bose-Einstein condensation

- 2001 Carl Wieman Bose-Einstein condensation

- 2002 Raymond Davis Jr. Cosmic neutrinos

- 2002 Riccardo Giacconi (I) Cosmic X-ray sources

- 2003 Anthony Leggett (I) The theory of superconductors and superfluids

- 2003 Alexei Abrikosov (I) The theory of superconductors and superfluids

- 2004 David Gross Asymptotic freedom in the strong interaction

- 2004 H. David Politzer Asymptotic freedom in the strong interaction

- 2004 Frank Wilczek Asymptotic freedom in the strong interaction

- 2005 John Hall Laser-based precision spectroscopy and the optical frequency comb

- 2005 Roy Glauber Quantum theory of optical coherence

- 2006 John Mather Anisotropy of the cosmic background radiation

- 2006 George Smoot Anisotropy of the cosmic background radiation

- 2008 Yoichiro Nambu (I) Spontaneous broken symmetry in subatomic physics

- 2009 Willard Boyle (I) CCD sensor

- 2009 George Smith CCD sensor

- 2009 Charles Kao (I) Fiber optics

- 2011 Saul Perlmutter Accelerating expansion of the Universe

- 2011 Brian Schmidt Accelerating expansion of the Universe

- 2011 Adam Riess Accelerating expansion of the Universe

- 2012 David Wineland Measuring and manipulating individual quantum systems

- 2014 Shuji Nakamura (I) Blue light-emitting diodes

- 2016 F. Duncan Haldane (I) Topological phase transitions

- 2016 John Kosterlitz (I) Topological phase transitions

- 2017 Rainer Weiss (I) LIGO detector and gravitational waves

- 2017 Kip Thorne LIGO detector and gravitational waves

- 2017 Barry Barish LIGO detector and gravitational waves

- 2018 Arthur Ashkin Optical tweezers

- 2019 Jim Peebles (I) Cosmology

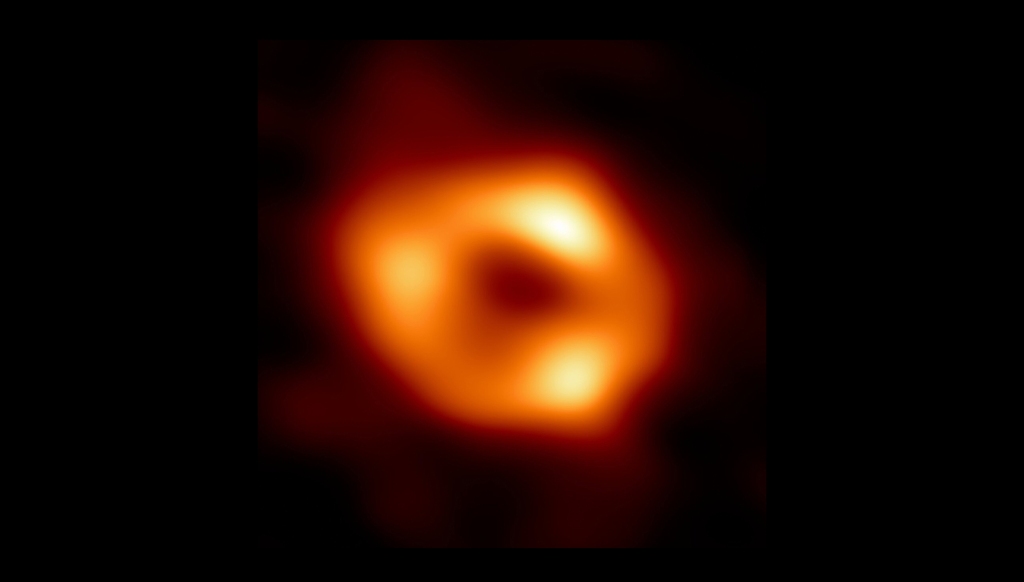

- 2020 Andrea Ghez Milky Way black hole

- 2021 Syukuro Manabe (I) Global warming

- 2022 John Clauser Quantum entanglement

(Note: This list does not include Enrico Fermi, who was awarded the Nobel Prize while in Italy. After traveling to Stockholm to receive the award, he did not return to Italy, but went to the US to protect his Jewish wife from the new race laws enacted by the nationalist government of Italy. There are many additional Nobel prize winners not on this list (like Albert Einstein) who received the Nobel Prize while in their own country but who then came to the US to teach at US institutions.)

Immigration and Elite Universities

A look at the data behind the previous list tells a striking story: 1) Nearly all of the American Nobel Prizes in physics were awarded for work performed at elite American universities; 2) Roughly a third of the prizes went to immigrants. And for those prize winners who were not immigrants themselves, many were taught by, or studied under, immigrant professors at those elite universities.

Elite universities are not just the source of Nobel Prizes, but are engines of the economy. The tech sector may contribute only 10% of the US GDP, but 85% of our GDP is attributed to “innovation”, much of it coming out of our universities. Our “inventive” economy drives the American standard of living and keeps us competitive in the worldwide market.

Today, elite universities, as well as immigration, are under attack by forces who want to make America great again. Legislatures in some states have passed laws restricting how those universities hire and teach, and more states are following suit. Some new state laws restrict where Chinese-born professors, who are teaching and conducting research at American universities, can buy houses. And some members of Congress recently ambushed the leaders of a few of our most elite universities (who failed spectacularly to use common sense), using the excuse of a non-academic issue to turn universities into a metaphor for the supposed evils of elitism.

But the forces seeking to make America great again may be undermining the very thing that made America great in the first place.

They want to cook the goose, but they are overlooking the golden eggs.