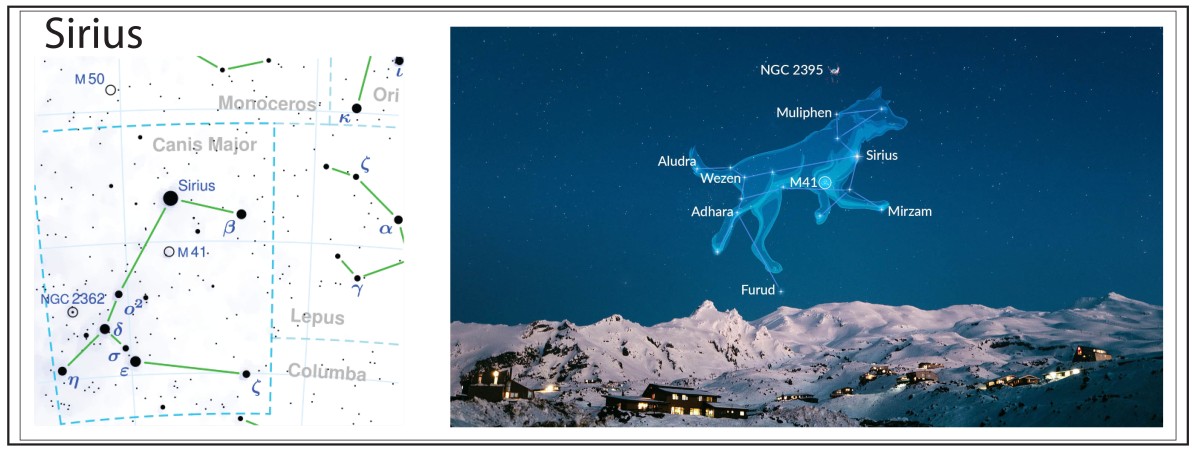

The constellation Orion strides high across the heavens on cold, crisp winter nights in the Northern Hemisphere, followed at his heel by his constant companion, Canis Major, the Great Dog. Blazing blue from the Dog’s proud chest is the star Sirius, the Dog Star, the brightest star in the night sky. Although it is only the seventh-closest star system to our sun, the six closer systems host dimmer dwarf stars. Sirius, on the other hand, is a young, bright star burning blue in the night. It is an infant star, really, only about 5% the age of our sun, coming into being when dinosaurs walked our planet.

The Sirius star system is a microcosm of mankind’s struggle to understand the Universe. Because it is close and bright, it has become the de facto bench-test for new theories of astrophysics as well as for new astronomical imaging technologies. It has played this role from the earliest days of history, when it was an element of religion rather than of science, down to the modern age as it continues to test and challenge new ideas about quantum matter and extreme physics.

Sirius Through the Ages

To the ancient Egyptians, Sirius was the star Sopdet, the welcome herald of the flooding of the Nile when it rose in the early morning sky of summer. The star was associated with Isis of the cow constellation Hathor (Canis Major), following closely behind Osiris (Orion). The importance of the annual floods for the well-being of the ancient culture cannot be overstated, and entire religious traditions full of symbolic significance revolved around the heliacal rising of Sirius (its first reappearance in the dawn sky after weeks hidden in the Sun’s glare).

To the Greeks, Sirius was always Sirius, although no one, even as far back as Hesiod in the 7th century BC, could recall where it got its name. It was the dog star, as it was also to the Persians and the Hindus, who called it Tishtrya and Tishya, respectively. The correspondence between the initial “T” of these names and the “S” of the Greek reflects a historical sound shift among these related Indo-European languages, indicating that the name of the star dates back at least as far as the divergence of the Indo-European languages around the fourth millennium BC. (Even more intriguing is the same association of Sirius with dogs and wolves by the ancient Chinese, by the Alaskan Inuit, and by many American Indian tribes, suggesting that the cultural significance of the star, if not its name, may have propagated across Asia and the Bering Strait as far back as the end of the last Ice Age.) As the brightest star in the sky, Sirius has held an enduring significance dating back to the beginning of human awareness of our place in nature. No culture was unaware of this astronomical companion to the Sun, Moon, and planets.

The Greeks, too, saw Sirius as a harbinger, not of life-giving floods but of the sweltering heat of late summer. Homer, in the Iliad, famously wrote:

And aging Priam was the first to see him sparkling on the plain, bright as that star in autumn rising, whose unclouded rays shine out amid a throng of stars at dusk— the one they call Orion's dog, most brilliant, yes, but baleful as a sign: it brings great fever to frail men. So pure and bright the bronze gear blazed upon him as he ran.

The Romans expanded on this view, describing “the dog days of summer,” a phrase that echoes to this day as we wait for the coming coolness of autumn.

The Heavens Move

The irony of the Copernican system of the universe, proposed in 1543 by Nicolaus Copernicus, is that it took stars that had moved persistently through the heavens and fixed them in the sky, immovable. The “fixed stars” remained the accepted norm for nearly two centuries, until the peripatetic Edmund Halley (1656 – 1742) wondered whether the stars really did not move. From Newton’s new work on celestial dynamics (the famous Principia, whose publication Halley paid for out of his own pocket, not only out of friendship with Newton but because he believed it was a monumental work that needed to be widely known), it was understood that gravitational forces would act on the stars and should cause them to move.

In 1710 Halley began comparing the star positions recorded by Ptolemy one and a half millennia earlier with what he could see in the night sky. He realized that Sirius had shifted in the sky by an angular distance comparable to the diameter of the Moon. Other bright stars, like Arcturus and Procyon, also showed discrepancies from Ptolemy. On the other hand, dimmer stars, which Halley reasoned were farther away, showed no discernible shifts in 1500 years. At a time when stellar parallax, the apparent shift in star positions caused by the movement of the Earth, had not yet been detected, Halley had found an alternative way to rank at least some distances to the stars, based on their proper motion across the sky: for comparable speeds through space, stars closer to the Earth would show larger angular displacements over 1500 years than stars farther away. As one of the nearest and brightest stars, Sirius became a testbed for observations and theories of the motions of stars. Emboldened by the apparent nearness of Sirius, Jacques Cassini claimed in 1714 to have measured its parallax, but Halley refuted the claim in 1720. Parallax would remain elusive for more than another hundred years.
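Halley’s reasoning can be made quantitative with two standard relations: a star’s total drift is its proper motion μ multiplied by the elapsed time, and its speed across the line of sight is v_t ≈ 4.74 μ d (in km/s, with μ in arcseconds per year and d in parsecs). The Python sketch below is illustrative only: the proper motion of Sirius (about 1.34″ per year) and its distance (about 2.64 parsecs) are approximate modern values assumed here, not figures from the text, and Halley himself had no distances to work with. It checks the Moon-diameter comparison and shows why the same space velocity produces ten times less drift at ten times the distance.

```python
# Proper-motion arithmetic behind Halley's comparison with Ptolemy's catalog.
# ASSUMED inputs: approximate modern values for Sirius, not taken from the text.

KM_S_PER_ARCSEC_YR_PC = 4.74  # v_t [km/s] = 4.74 * mu [arcsec/yr] * d [pc]


def angular_shift_arcmin(mu_arcsec_per_yr: float, years: float) -> float:
    """Total drift across the sky after `years`, converted to arcminutes."""
    return mu_arcsec_per_yr * years / 60.0


def transverse_velocity_km_s(mu_arcsec_per_yr: float, distance_pc: float) -> float:
    """Speed across the line of sight implied by a proper motion at a given distance."""
    return KM_S_PER_ARCSEC_YR_PC * mu_arcsec_per_yr * distance_pc


def proper_motion_arcsec_per_yr(v_t_km_s: float, distance_pc: float) -> float:
    """Inverse relation: the same space velocity produces less drift farther away."""
    return v_t_km_s / (KM_S_PER_ARCSEC_YR_PC * distance_pc)


MU_SIRIUS = 1.34  # arcsec/yr (ASSUMED, approximate)
D_SIRIUS = 2.64   # parsecs, about 8.6 light-years (ASSUMED, approximate)
YEARS = 1500      # span quoted in the text

print(f"Sirius drifts {angular_shift_arcmin(MU_SIRIUS, YEARS):.1f} arcmin in {YEARS} yr "
      "(the full Moon spans about 31 arcmin)")

# Keep the space velocity fixed and move the star farther away.
v_t = transverse_velocity_km_s(MU_SIRIUS, D_SIRIUS)
for d in (D_SIRIUS, 10 * D_SIRIUS, 100 * D_SIRIUS):
    drift = angular_shift_arcmin(proper_motion_arcsec_per_yr(v_t, d), YEARS)
    print(f"{v_t:.0f} km/s at {d:6.1f} pc -> {drift:6.2f} arcmin in {YEARS} yr")
```

The drift of roughly half a degree matches the Moon comparison, while the dimmer, more distant stars would have moved by amounts too small for Halley to notice against Ptolemy’s positions.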

The Sound of Sirius

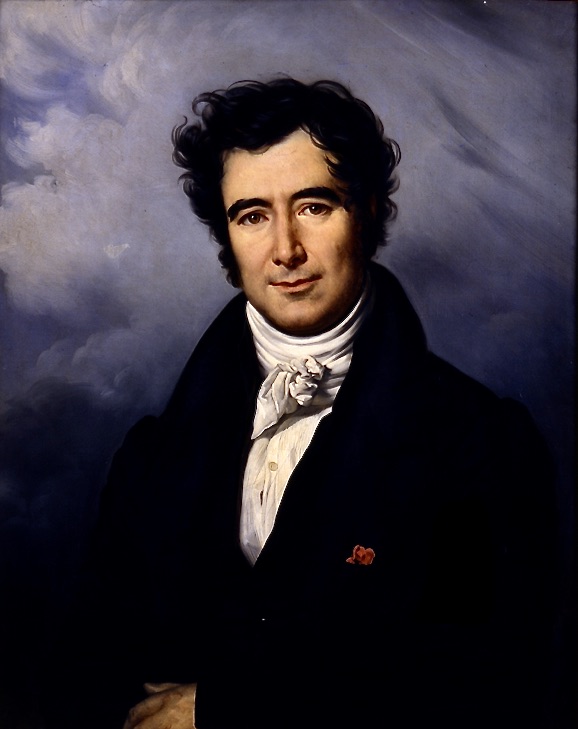

Of all the discoveries that emerged from nineteenth-century physics (Young’s fringes, the Biot-Savart law, the Fresnel lens, the Carnot cycle, the Faraday effect, Maxwell’s equations, the Michelson interferometer), only one is heard daily: the Doppler effect [1]. Doppler’s name is invoked every time you turn on the evening news to watch Doppler weather radar. Doppler’s effect is experienced as you wait by the side of the road for a car to pass by or a jet to fly overhead. Einstein may have the most famous name in physics, but Doppler’s is certainly the most commonly used.

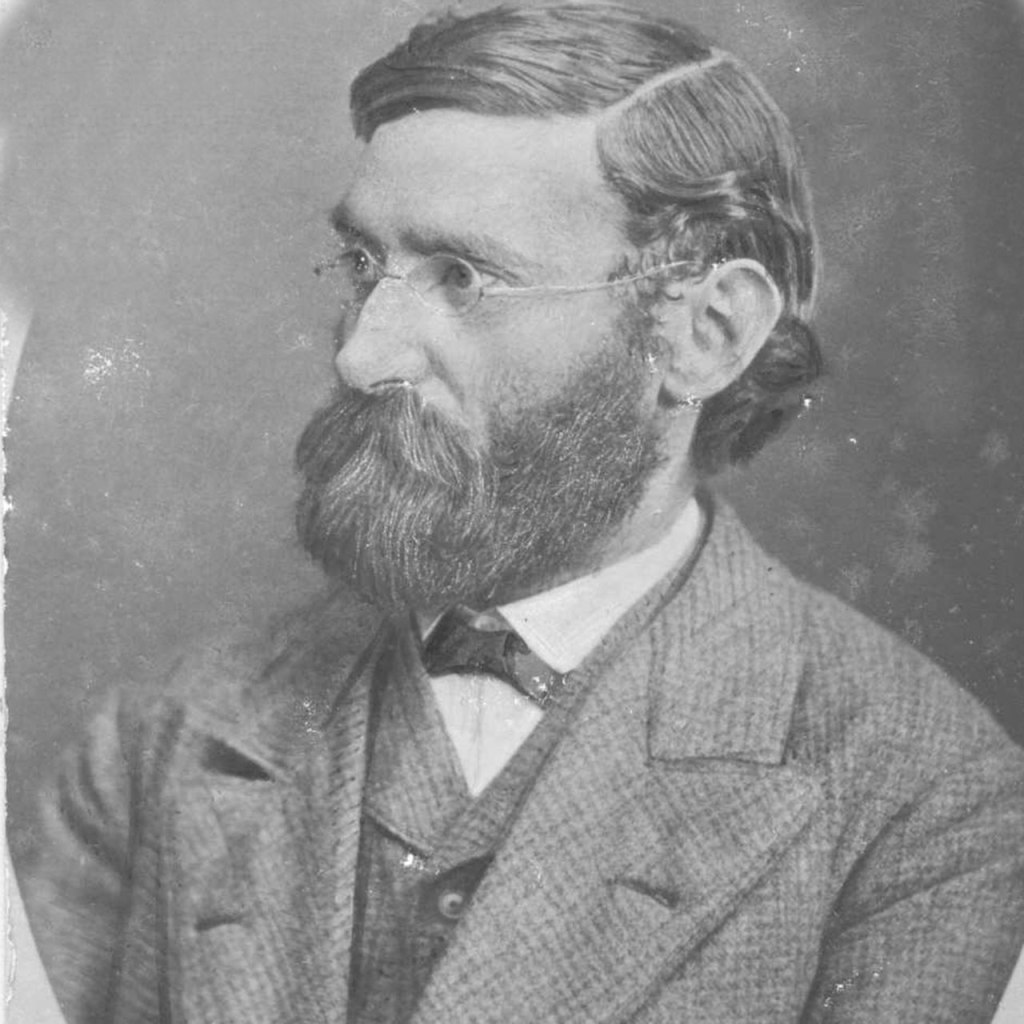

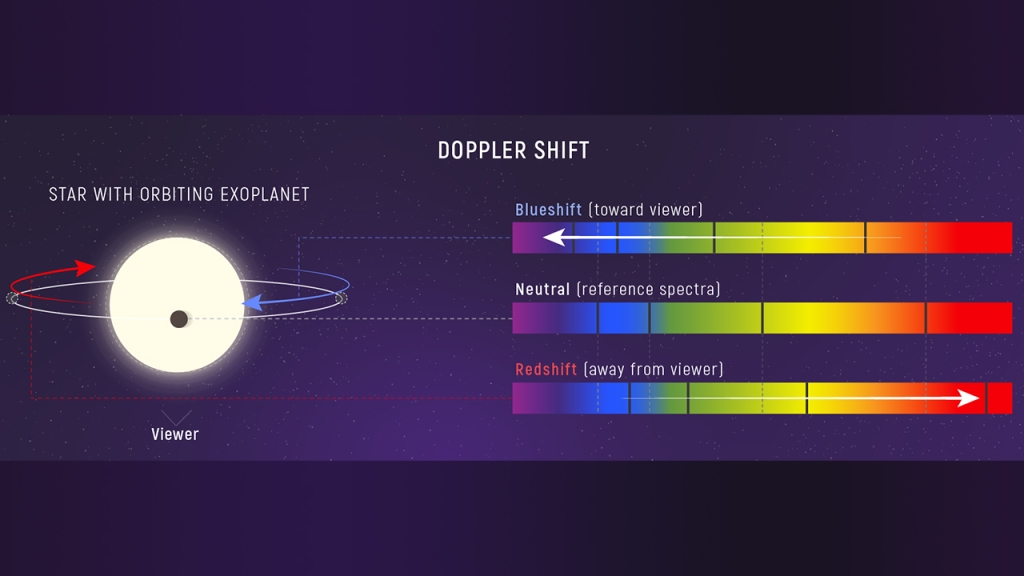

Although experimental support for the acoustic Doppler effect accumulated quickly, corresponding demonstrations of the optical Doppler effect were slow to emerge. The breakthrough in the optical Doppler effect was made by William Huggins (1824-1910). Huggins was an early pioneer in astronomical spectroscopy and was famous for having discovered that some bright nebulae consist of glowing atomic gas (planetary nebulae in our own galaxy) while others (later recognized as distant galaxies) consist of unresolved emitting stars. Huggins was intrigued by the possibility of using the optical Doppler effect to measure the speed of stars, and he corresponded with James Clerk Maxwell (1831-1879) to confirm the soundness of Doppler’s arguments, which Maxwell corroborated using his new electromagnetic theory. With the resulting confidence, Huggins turned his attention to the brightest star in the heavens, Sirius, and on May 14, 1868, he read a paper to the Royal Society of London claiming an observation of Doppler shifts in the spectral lines of Sirius consistent with a speed of about 50 km/sec [2].
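A quick estimate shows how delicate Huggins’ measurement was. For speeds far below the speed of light, the Doppler shift of a spectral line is Δλ = λv/c. The Python sketch below assumes, for illustration, the hydrogen F line (Hβ, about 486.1 nm) as the reference line; the two velocities are the value quoted in the text and the modern value given in footnote [2].

```python
C_KM_S = 299_792.458  # speed of light, km/s


def doppler_shift_nm(rest_nm: float, radial_velocity_km_s: float) -> float:
    """Non-relativistic Doppler shift, delta_lambda = lambda * v / c.
    Positive velocity means receding (shift to longer wavelength)."""
    return rest_nm * radial_velocity_km_s / C_KM_S


def radial_velocity_km_s(rest_nm: float, observed_nm: float) -> float:
    """Invert a measured line position back into a line-of-sight speed."""
    return C_KM_S * (observed_nm - rest_nm) / rest_nm


H_BETA_NM = 486.13  # hydrogen F line (H-beta), ASSUMED as the reference line

for label, v in (("Huggins 1868, about +50 km/s", 50.0), ("modern value, -5.5 km/s", -5.5)):
    shift_angstrom = doppler_shift_nm(H_BETA_NM, v) * 10.0  # 1 nm = 10 angstroms
    print(f"{label:30s}: line shift {shift_angstrom:+.3f} angstrom")
```

Both shifts are under one ångström, and the true one is under a tenth of an ångström, which helps explain why early visual measurements produced velocities comparable to their own uncertainties.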

The importance of Huggins’ report on the Doppler effect from Sirius was more psychological than scientific, because, despite its inaccuracy, it convinced the scientific community that the optical Doppler effect existed. Around this time the German astronomer Hermann Carl Vogel (1841 – 1907) of the Potsdam Observatory began working with a new spectrograph designed by Johann Zöllner of Leipzig [3] to improve measurements of the radial velocities of stars (their speeds along the line of sight). He was aware that many of the stellar velocities quoted by Huggins and others were comparable to the uncertainties in their measurements. Vogel added photographic capabilities to the telescope and spectrograph at the Potsdam Observatory [4] in 1887 and made observations of Doppler line shifts in stars through 1890. He published an initial progress report in 1891, and then a definitive paper in 1892 that provided the first accurate stellar radial velocities [5]. Fifty years after Doppler read his paper to the Royal Bohemian Society of Science (in 1842, to a paltry audience of only a few scientists), the Doppler effect had become an established workhorse of quantitative astrophysics. A laboratory demonstration of the optical Doppler effect was finally achieved in 1901 by the Russian astronomer Aristarkh Belopolsky (1854-1934), who built a device with a narrow-linewidth light source and rapidly rotating mirrors [6].

White Dwarf

While measuring the position of Sirius to unprecedented precision, the German astronomer Friedrich Wilhelm Bessel (1784 – 1846) noticed a slow, periodic wobble in its path across the sky. (This is the Bessel of “Bessel function” fame, although the functions were originally developed by Daniel Bernoulli and only later generalized by Bessel.) Bessel deduced that Sirius must have an unseen companion with an orbital period of around 50 years. This companion was discovered by accident in 1862 during a test of a new lens made by the telescope makers Alvan Clark & Sons, prior to its delivery to Northwestern University in Chicago. (The lens was originally ordered by the University of Mississippi in 1860, but after the Civil War broke out, the Massachusetts-based Clark company put it up for bid. Harvard wanted it, but Northwestern got it.) Sirius itself was redesignated Sirius A, while the new star was designated Sirius B (and is sometimes called “The Pup”).

The Pup’s spectrum was measured in 1915 by Walter Adams (1876 – 1956), which put it in the newly formed class of “white dwarf” stars: stars that were very small but, unlike other types of dwarf stars, had very hot (white) spectra. The wobble of Sirius A allowed the mass of Sirius B to be estimated at about one solar mass, which was normal for a dwarf star. Furthermore, its brightness and surface temperature fixed its radius (a star that is hot yet faint must have a tiny radiating surface) and hence its density, but here an incredible number came out: the density of Sirius B was about 30,000 times greater than the density of the sun! Astronomers at the time thought that this was impossible, and Arthur Eddington, the leading expert on stellar structure, called it “nonsense.” This nonsense withstood all attempts to explain it for over a decade.
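The mass estimate rests on Kepler’s third law. In solar units (AU, years, solar masses) the total mass of a binary is M_A + M_B = a³/P², and the barycenter condition M_A a_A = M_B a_B splits that total according to how far the bright star wobbles. In the Python sketch below, the orbital period (about 50 years, as in the text), the relative semimajor axis (about 20 AU), and the size of Sirius A’s wobble (roughly a third of that) are approximate values assumed for illustration.

```python
def total_mass_solar(a_au: float, period_yr: float) -> float:
    """Kepler's third law in solar units: M_A + M_B = a**3 / P**2
    (a = relative semimajor axis in AU, P = period in years)."""
    return a_au**3 / period_yr**2


def companion_mass_solar(a_au: float, period_yr: float, wobble_au: float) -> float:
    """Mass of the companion from the bright star's wobble about the barycenter.
    M_A * a_A = M_B * a_B with a = a_A + a_B gives M_B = (M_A + M_B) * a_A / a."""
    return total_mass_solar(a_au, period_yr) * wobble_au / a_au


P_YR = 50.1      # orbital period in years (about 50, as in the text)
A_AU = 19.8      # relative semimajor axis in AU (ASSUMED, approximate)
WOBBLE_AU = 6.6  # semimajor axis of Sirius A's wobble in AU (ASSUMED, roughly a/3)

print(f"M_A + M_B ~ {total_mass_solar(A_AU, P_YR):.2f} solar masses")
print(f"M_B (Sirius B) ~ {companion_mass_solar(A_AU, P_YR, WOBBLE_AU):.2f} solar masses")
```

The point of the exercise is that the companion’s mass comes entirely from orbital dynamics, without ever needing to see it, which is why the later density estimate was so hard to dismiss.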

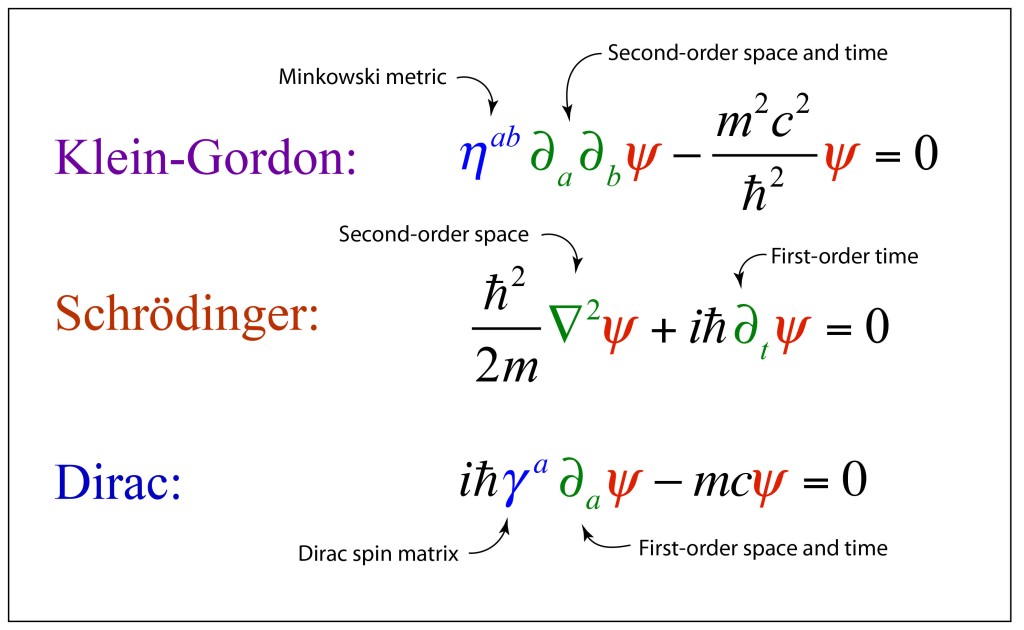

In 1926, R. H. Fowler (1889 – 1944) at Cambridge University in England applied the newly developed theory of quantum mechanics and the Pauli exclusion principle to the problem of such ultra-dense matter. He found that the Fermi sea of electrons provides a type of pressure, called degeneracy pressure, that counteracts the gravitational pressure threatening to collapse the star under its own weight. Several years later, Subrahmanyan Chandrasekhar calculated the upper mass limit for white dwarfs, including relativistic effects and accurate density profiles, and found that a white dwarf with a mass greater than about 1.4 times the mass of the sun would no longer be supported by electron degeneracy pressure and would suffer gravitational collapse. At the time, no one knew what such a star would collapse into, although it was later understood that it would collapse to a neutron star. Sirius B, at about one solar mass, is well within the stable range of white dwarfs.
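The limiting mass can be estimated from the textbook closed form for a fully relativistic, degenerate electron gas, M_Ch = ω₃ (√(3π)/2) (ħc/G)^(3/2) / (μ_e m_H)², where ω₃ ≈ 2.018 comes from the n = 3 polytrope (Lane-Emden) solution and μ_e is the number of nucleons per electron. This Python sketch evaluates it with μ_e = 2, an assumption appropriate for helium, carbon, or oxygen; it is an idealized model that ignores rotation, magnetic fields, and finer details of composition.

```python
import math

# Physical constants in SI units
HBAR = 1.054_571_817e-34  # reduced Planck constant, J s
C = 2.997_924_58e8        # speed of light, m/s
G = 6.674_30e-11          # gravitational constant, m^3 kg^-1 s^-2
M_H = 1.673_5e-27         # mass of a hydrogen atom, kg
M_SUN = 1.988_4e30        # solar mass, kg
OMEGA3 = 2.018_236        # constant from the n = 3 Lane-Emden solution


def chandrasekhar_mass_solar(mu_e: float = 2.0) -> float:
    """Limiting mass (in solar masses) of a star supported only by
    ultra-relativistic electron degeneracy pressure.
    mu_e = nucleons per electron (about 2 for He, C, O)."""
    mass_kg = (OMEGA3 * math.sqrt(3 * math.pi) / 2
               * (HBAR * C / G) ** 1.5 / (mu_e * M_H) ** 2)
    return mass_kg / M_SUN


limit = chandrasekhar_mass_solar()
print(f"Chandrasekhar limit ~ {limit:.2f} solar masses")
print(f"Sirius B (~1 solar mass) below the limit: {1.0 < limit}")
```

Only fundamental constants and the composition enter the formula, which is what made the limit such a striking prediction.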

But this was not the end of the story for Sirius B [7]. At around the time that Adams was measuring the spectrum of the white dwarf, Einstein was predicting that light emerging from a dense star would be gravitationally redshifted relative to the same light emitted in a laboratory on Earth. This was one of the three classic tests he proposed for his new theory of General Relativity: (1) the precession of the perihelion of Mercury, (2) the deflection of light by gravity, and (3) the gravitational redshift of photons rising out of a gravity well. Adams announced in 1925 (after the deflection of light by gravity had been confirmed by Eddington in 1919) that he had measured the gravitational redshift. Unfortunately, it was later surmised that he had not measured the gravitational effect but had actually measured Doppler-shifted spectra caused by the rotational motion of the star. The true gravitational redshift of Sirius B was finally measured in 1971, although the redshift of another white dwarf, 40 Eridani B, had already been measured in 1954.
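In the weak-field limit the gravitational redshift is z ≈ GM/(Rc²), usually quoted as an equivalent Doppler velocity v = cz = GM/(Rc). The Python sketch below uses one solar mass for Sirius B, as in the text, and assumes a radius of about 0.0084 solar radii (roughly the size of the Earth), a modern value not given in the text.

```python
G = 6.674_30e-11    # gravitational constant, m^3 kg^-1 s^-2
C = 2.997_924_58e8  # speed of light, m/s
M_SUN = 1.988_4e30  # solar mass, kg
R_SUN = 6.957e8     # solar radius, m


def grav_redshift_km_s(mass_solar: float, radius_solar: float) -> float:
    """Weak-field gravitational redshift expressed as an equivalent velocity:
    z ~ G M / (R c^2), so v = c z = G M / (R c), returned in km/s."""
    mass = mass_solar * M_SUN
    radius = radius_solar * R_SUN
    z = G * mass / (radius * C**2)
    return C * z / 1e3


print(f"Sun      : {grav_redshift_km_s(1.0, 1.0):5.2f} km/s")
print(f"Sirius B : {grav_redshift_km_s(1.0, 0.0084):5.1f} km/s  (radius ASSUMED ~0.0084 R_sun)")
```

The white dwarf’s shift comes out at tens of kilometers per second, more than a hundred times the Sun’s, yet still the same order as ordinary stellar Doppler velocities, which is why the two effects are so easy to confuse.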

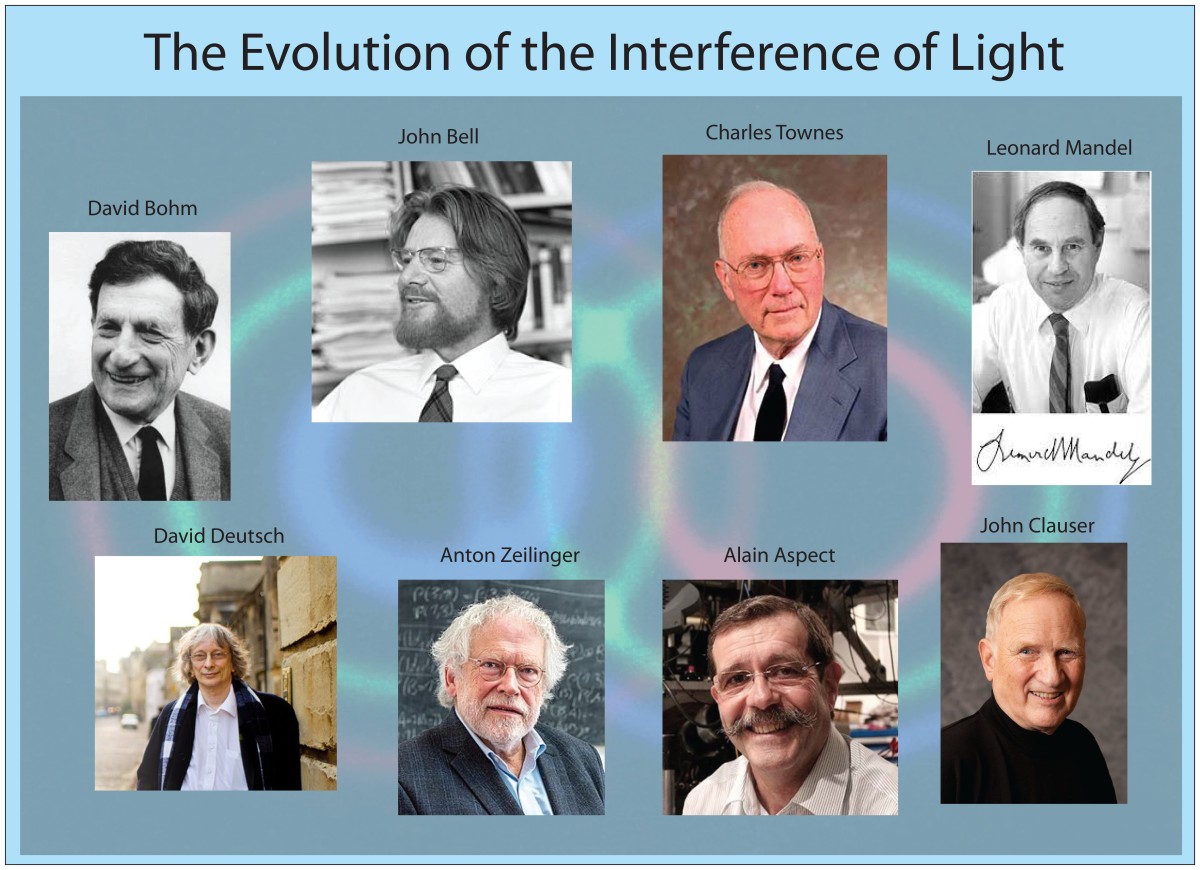

Static Interference

The quantum nature of light is an elusive quality that requires second-order experiments on intensity fluctuations, rather than measurements of average intensity, to elucidate it. But even in second-order experiments, the manifestations of quantum phenomena are subtle, as evidenced by the intense controversy launched by optical experiments performed in the 1950s by a radio astronomer, Robert Hanbury Brown (1916 – 2002). (For the full story, see Chapter 4 in my book Interference from Oxford (2023) [8].)

Hanbury Brown (he never went by his first name) was born in Aruvankandu, India, the son of a British army officer. He did not seem destined for great things, receiving an unremarkable education that led to a degree in radio engineering from a technical college in 1935. He hoped to get a PhD in radio technology, and he had even received a scholarship to study at Imperial College in London, when he was urged by the rector of the college, Sir Henry Tizard, to give up his plans and join an effort to develop defensive radar against the growing threat from Nazi Germany, which was aggressively rearming after abandoning the punitive Versailles Treaty. Hanbury Brown began the most exciting and unnerving five years of his life, right in the middle of the early development of radar defense, leading up to the crucial role it played in the Battle of Britain in 1940 and the Blitz from 1940 to 1941. Partly due to the success of radar, Hitler halted night-time raids in the spring of 1941, and England escaped invasion.

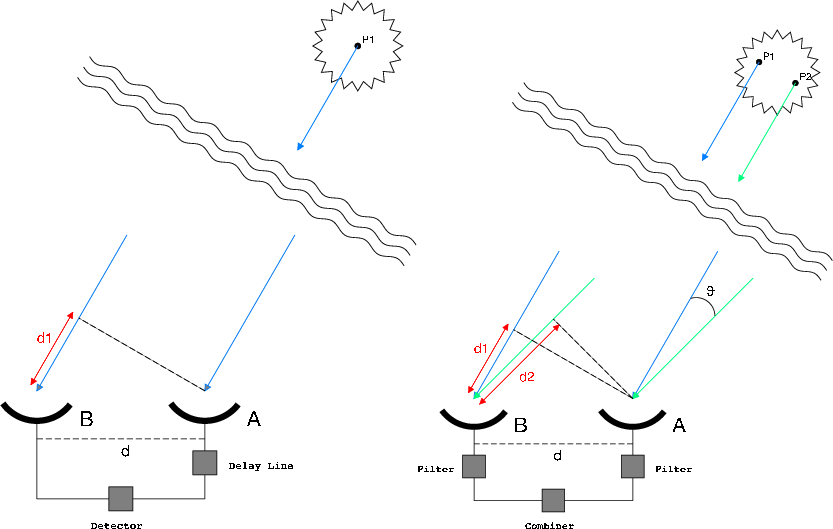

In 1949, fourteen years after he had originally planned to start his PhD, Hanbury Brown enrolled at the relatively ripe age of 33 at the University of Manchester. Because of his background in radar, his faculty advisor told him to look into the new field of radio astronomy that was just getting started, and Manchester was a major player because it administered the Jodrell Bank Observatory, one of the first and largest radio astronomy observatories in the world. Hanbury Brown was soon applying all he had learned about radar transmitters and receivers to the new field, focusing particularly on aspects of radio interferometry after Martin Ryle (1918 – 1984) at Cambridge, with Derek Vonberg (1921 – 2015), developed the first radio interferometer to measure the angular size of the sun [9] and of radio sources on the Sun’s surface that were related to sunspots [10]. Despite the success of their measurements, their small interferometer was unable to measure the size of other astronomical sources. From Michelson’s formula for stellar interferometry, longer baselines between two separated receivers would be required to measure smaller angular sizes. For his PhD project, Hanbury Brown was given the task of designing a radio interferometer to resolve the two strongest radio sources in the sky, Cygnus A and Cassiopeia A, whose angular sizes were unknown. As he started the project, he was confronted with the problem of distributing a stable reference signal to receivers that might be very far apart, maybe even thousands of kilometers, a problem that had no easy solution.

After grappling with this technical problem for months without success, late one night in 1949 Hanbury Brown had an epiphany [11], wondering what would happen if the two separate radio antennas measured only intensities rather than fields. The intensity in a radio telescope fluctuates in time like random noise. If that random noise were measured at two separated receivers while trained on a common source, would those noise patterns look the same? After a few days of considering this question, he convinced himself that the noise would indeed share common features, and the degree to which the two noise traces were similar should depend on the size of the source and the distance between the two receivers, just like Michelson’s fringe visibility. But his arguments were back-of-the-envelope, so he set out to find someone with the mathematical skills to do it more rigorously. He found Richard Twiss.

Richard Quentin Twiss (1920 – 2005), like Hanbury Brown, was born in India to British parents but had followed a more prestigious educational path, taking the Mathematical Tripos exam at Cambridge in 1941 and receiving his PhD from MIT in the United States in 1949. He had just returned to England, joining the research division of the armed services located north of London, when he received a call from Hanbury Brown at the Jodrell Bank radio astronomy laboratory in Manchester. Twiss travelled to Manchester to meet Hanbury Brown, who put him up in his flat in the neighboring town of Wilmslow. The two set up the mathematical assumptions behind the new “intensity interferometer” and worked late into the night. When Hanbury Brown finally went to bed, Twiss was still figuring the numbers. The next morning, the tall and lanky Twiss appeared in the kitchen in his silk dressing gown and told Hanbury Brown, “This idea of yours is no good, it doesn’t work” [12]: by his reckoning, the effect would be far too weak to detect with starlight. However, after haggling over the details of some of the integrals, Hanbury Brown, and then finally Twiss, became convinced that the effect was real. Rather than fringe visibility, it was the correlation coefficient between two noise signals that would depend on the angular size of the source and the separation of the receivers, in a way that captured the same information as Michelson’s first-order fringe visibility. But because no coherent reference wave was needed for interferometric mixing, this new approach could be carried out across very large baseline distances.
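The key result of Hanbury Brown and Twiss can be checked with a toy Monte Carlo. For chaotic (thermal) light, whose field behaves as a circular complex Gaussian random variable, the normalized correlation of intensity fluctuations at two detectors equals |γ|², the square of the mutual coherence γ that sets Michelson’s fringe visibility (the Siegert relation). The Python sketch below is a simplified model that assumes a real-valued γ, unit mean intensity, and ideal detectors with no shot noise or bandwidth limits. It records only intensities, never phases, yet recovers γ².

```python
import math
import random


def complex_gaussian(rng: random.Random) -> complex:
    """Circular complex Gaussian sample with <|E|^2> = 1 (a model of thermal light)."""
    s = math.sqrt(0.5)  # each quadrature carries half of the mean power
    return complex(rng.gauss(0.0, s), rng.gauss(0.0, s))


def intensity_correlation(gamma: float, n: int = 100_000, seed: int = 1) -> float:
    """Normalized covariance <dI1 dI2> / (<I1><I2>) for two detectors whose
    fields have (real) mutual coherence gamma, with 0 <= gamma <= 1."""
    rng = random.Random(seed)
    w = math.sqrt(1.0 - gamma**2)
    i1, i2 = [], []
    for _ in range(n):
        a = complex_gaussian(rng)  # field component common to both detectors
        b = complex_gaussian(rng)  # independent component seen only by detector 2
        e1 = a
        e2 = gamma * a + w * b     # gives <e1 e2*> = gamma and <|e2|^2> = 1
        i1.append(abs(e1) ** 2)    # square-law detectors: intensity only, no phase
        i2.append(abs(e2) ** 2)
    m1, m2 = sum(i1) / n, sum(i2) / n
    cov = sum((x - m1) * (y - m2) for x, y in zip(i1, i2)) / n
    return cov / (m1 * m2)


for g in (1.0, 0.5, 0.0):
    print(f"gamma = {g:.1f} -> intensity correlation ~ {intensity_correlation(g):.3f}"
          f"  (gamma^2 = {g * g:.2f})")
```

As the receivers are moved apart and the source becomes resolved, γ falls and the intensity correlation falls with its square, which is the same size information that a Michelson interferometer extracts from fringe visibility.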

After demonstrating the effect on astronomical radio sources, Hanbury Brown and Twiss took the next obvious step: optical stellar intensity interferometry. Their work had shown that photon noise correlations were analogous to Michelson fringe visibility, so the stellar intensity interferometer was expected to work similarly to the Michelson stellar interferometer, but with better stability over much longer baselines because it did not need a reference. An additional advantage was the simple light-collecting requirements. Rather than needing a pair of massively expensive telescopes for high-resolution imaging, the intensity interferometer only needed to point two simple light collectors in a common direction. For this purpose, and to save money, Hanbury Brown selected two of the largest army-surplus anti-aircraft searchlights that he could find left over from the London Blitz. The lamps were removed and replaced with high-performance photomultipliers, and the units were installed on two train cars that could run along a railroad siding that crossed the Jodrell Bank grounds.

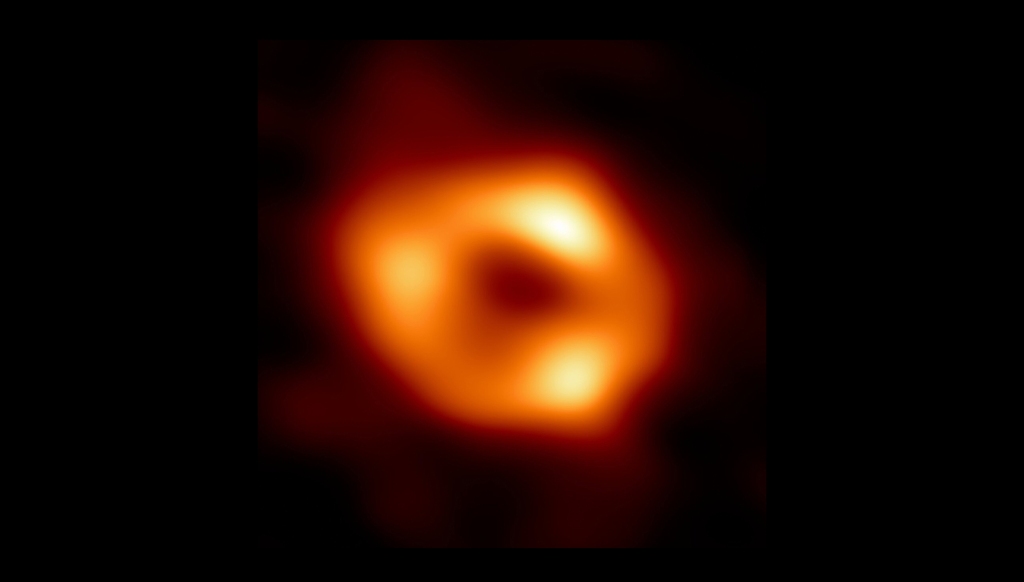

The target of the first test of the optical intensity interferometer was Sirius, the Dog Star. Sirius was chosen because it is the brightest star in the night sky and is close to Earth, at 8.6 light-years, and hence would be expected to have a relatively large angular size. The observations began at the start of winter in 1955, but the legendary English weather proved an obstacle. In addition to endless weeks of cloud cover, on many nights dew formed on the reflecting mirrors, making it necessary to install heaters. It took more than three months and 60 operational attempts to accumulate a mere 18 hours of observations [13]. But it worked! The angular size of Sirius was measured for the first time. It subtended an angle of approximately 6 milliarcseconds (mas), well within the expected range for a hot, blue-white main-sequence star. This is the angle subtended by a house on the Moon as seen from the Earth. No single non-interferometric telescope on Earth, or in Earth orbit, has that kind of resolution, even today. Once again, Sirius was the testbed of a new observational technology. Hanbury Brown and Twiss went on to measure the diameters of dozens of stars.
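The numbers in this paragraph can be cross-checked with small-angle arithmetic. The Python sketch below assumes a mean Earth-Moon distance of 384,400 km, a solar diameter of about 1.39 million km, and an observing wavelength of 440 nm (a blue wavelength chosen for illustration, not taken from the text). The 1.22λ/θ baseline is the standard first-null criterion for a uniformly bright disk; it applies to intensity interferometry as well, because the measured correlation is the square of the fringe visibility and vanishes wherever the visibility does.

```python
import math

MAS_PER_RAD = math.degrees(1.0) * 3600 * 1000  # milliarcseconds per radian
MOON_DISTANCE_M = 3.844e8   # mean Earth-Moon distance (ASSUMED round value)
LIGHT_YEAR_M = 9.461e15     # metres per light-year
SUN_DIAMETER_M = 1.392e9    # solar diameter (ASSUMED round value)


def linear_size_m(angle_mas: float, distance_m: float) -> float:
    """Small-angle approximation: size = angle in radians * distance."""
    return angle_mas / MAS_PER_RAD * distance_m


def first_null_baseline_m(angle_mas: float, wavelength_m: float) -> float:
    """Baseline at which a uniform disk of angular diameter theta is first
    resolved (coherence, and hence intensity correlation, falls to zero):
    B = 1.22 * lambda / theta."""
    return 1.22 * wavelength_m / (angle_mas / MAS_PER_RAD)


THETA_MAS = 6.0  # angular diameter of Sirius quoted in the text

print(f"object spanning 6 mas at the Moon : {linear_size_m(THETA_MAS, MOON_DISTANCE_M):.0f} m")
print(f"Sirius diameter / Sun diameter    : "
      f"{linear_size_m(THETA_MAS, 8.6 * LIGHT_YEAR_M) / SUN_DIAMETER_M:.1f}")
print(f"first-null baseline at 440 nm     : {first_null_baseline_m(THETA_MAS, 440e-9):.0f} m")
```

A house-sized object about 11 m across confirms the Moon comparison, and the same angle at 8.6 light-years implies a star roughly 1.7 times the Sun’s diameter. A baseline of under 20 m suffices at this angle, short enough for two searchlights on a railway siding.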

Adaptive Optics

Any undergraduate optics student can tell you that bigger telescopes have higher spatial resolution. But this is only true up to a point. Once telescope diameters exceed about 10 inches, the images they form start to dance, caused by thermal fluctuations in the atmosphere. Large telescopes can still get “lucky” at moments when the atmosphere is quiet, but this usually lasts only a fraction of a second before the fluctuations set in again. This is the primary reason that the Hubble Space Telescope was placed in Earth orbit above the atmosphere, and why the James Webb Space Telescope is flying a million miles away from the Earth. But that is not the end of large Earth-based telescopes. The Very Large Telescope (VLT) has primary mirrors 8 meters in diameter, and the Extremely Large Telescope (ELT), coming online soon, will have an even bigger diameter of 39 meters. How do these work under the atmospheric blanket? The answer is adaptive optics.
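Why roughly 10 inches? Two blur scales compete: diffraction, about 1.22λ/D, which shrinks as the aperture D grows, and atmospheric seeing, about 0.98λ/r₀, set by the Fried parameter r₀, the size of an atmospheric patch that stays roughly coherent. The Python sketch below assumes r₀ = 20 cm at 500 nm, a typical value for a good site; beyond the crossover diameter, a bigger mirror gathers more light but, without correction, resolves no finer detail.

```python
import math

MAS_PER_RAD = math.degrees(1.0) * 3600 * 1000  # milliarcseconds per radian


def diffraction_limit_mas(diameter_m: float, wavelength_m: float = 500e-9) -> float:
    """Rayleigh criterion for a circular aperture: 1.22 * lambda / D."""
    return 1.22 * wavelength_m / diameter_m * MAS_PER_RAD


def seeing_limit_mas(r0_m: float, wavelength_m: float = 500e-9) -> float:
    """Approximate seeing-disk width set by turbulence: 0.98 * lambda / r0."""
    return 0.98 * wavelength_m / r0_m * MAS_PER_RAD


def effective_resolution_mas(diameter_m: float, r0_m: float,
                             wavelength_m: float = 500e-9) -> float:
    """Without adaptive optics, the worse (larger) of the two blurs wins."""
    return max(diffraction_limit_mas(diameter_m, wavelength_m),
               seeing_limit_mas(r0_m, wavelength_m))


def crossover_diameter_m(r0_m: float) -> float:
    """Aperture where diffraction and seeing blur are equal (independent of lambda)."""
    return 1.22 / 0.98 * r0_m


R0_M = 0.20  # Fried parameter at 500 nm (ASSUMED, good-site value)

print(f"crossover aperture: {crossover_diameter_m(R0_M) / 0.0254:.1f} inches")
for name, d in (("10-inch", 0.254), ("VLT unit (8.2 m)", 8.2), ("ELT (39 m)", 39.0)):
    print(f"{name:>17}: diffraction {diffraction_limit_mas(d):6.1f} mas, "
          f"uncorrected {effective_resolution_mas(d, R0_M):6.1f} mas")
```

Under these assumptions an 8-meter mirror could in principle resolve about 15 mas, but the atmosphere holds it to roughly half an arcsecond, the same as a backyard telescope.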

Adaptive optics uses active feedback: it measures the dancing images caused by the atmosphere and uses that information to control a flexible array of mirror elements that cancel out most of the effects of the atmospheric fluctuations. In the early days of adaptive-optics development, the applications were more military than astronomical, but advances made in imaging enemy satellites were soon released to astronomers. The first civilian demonstrations of adaptive optics were performed in 1977, when researchers at Bell Labs [14] and at the Space Sciences Lab at UC Berkeley [15] each demonstrated improved seeing of the star Sirius using adaptive optics. The field developed rapidly after that, but once again Sirius had led the way.
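The idea of canceling the atmosphere can be illustrated with a deliberately simplified model: a one-dimensional pupil, a turbulent wavefront built from random cosines whose amplitude falls with spatial frequency, a perfect wavefront sensor, and a segmented mirror whose elements each subtract the average phase over their patch. Image quality is scored by the Strehl ratio |⟨e^{iφ}⟩|², the peak brightness relative to a perfect wavefront. None of these choices describes a specific instrument (the seed, mode count, and amplitudes are arbitrary); the Python sketch only shows why more mirror elements track the distortion more closely.

```python
import cmath
import math
import random


def phase_screen(n_samples: int, seed: int = 0, n_modes: int = 20,
                 strength: float = 3.0) -> list[float]:
    """Toy 1-D turbulent wavefront in radians: a sum of cosines whose amplitude
    falls as 1/frequency, so the largest scales carry most of the distortion."""
    rng = random.Random(seed)
    modes = [(f, strength / f, rng.uniform(0.0, 2 * math.pi)) for f in range(1, n_modes + 1)]
    return [sum(a * math.cos(2 * math.pi * f * i / n_samples + p) for f, a, p in modes)
            for i in range(n_samples)]


def strehl(phase: list[float]) -> float:
    """Peak intensity relative to a perfect wavefront: |<exp(i * phase)>|^2."""
    field = sum(cmath.exp(1j * p) for p in phase) / len(phase)
    return abs(field) ** 2


def apply_segmented_mirror(phase: list[float], n_actuators: int) -> list[float]:
    """Idealized correction: a perfect sensor reports the mean phase over each
    mirror segment, and that segment is pistoned to cancel it. Returns the
    residual phase. Assumes n_actuators divides len(phase) evenly."""
    seg = len(phase) // n_actuators
    residual = []
    for k in range(n_actuators):
        chunk = phase[k * seg:(k + 1) * seg]
        piston = sum(chunk) / len(chunk)
        residual.extend(p - piston for p in chunk)
    return residual


wavefront = phase_screen(1024)
print(f"no correction: Strehl = {strehl(wavefront):.3f}")
for n_act in (4, 16, 64):
    corrected = apply_segmented_mirror(wavefront, n_act)
    print(f"{n_act:3d} segments : Strehl = {strehl(corrected):.3f}")
```

Real systems use continuous deformable mirrors, measure the wavefront with noise, and run this loop hundreds of times per second, but the trend is the same: finer mirror control leaves a smaller residual and a sharper image.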

Star Travel

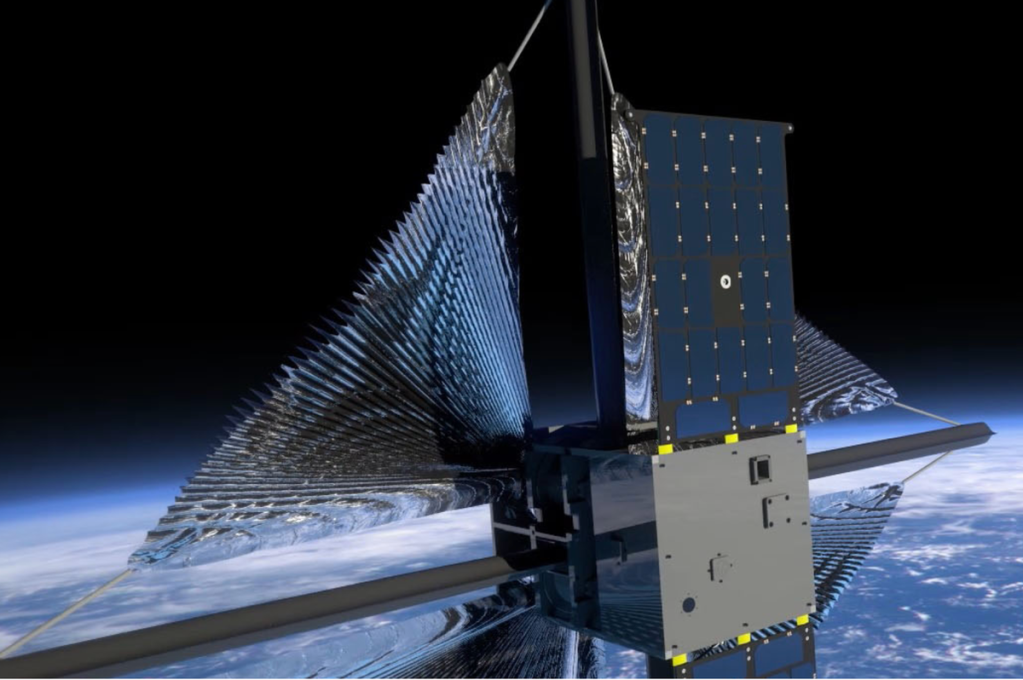

The day is fast approaching when humans will begin thinking seriously of visiting nearby stars, not in person at first, but with unmanned spacecraft that can telemeter information back to Earth. Although Sirius is not the closest star to Earth (it is 8.6 light-years away, while Alpha Centauri is about twice as close at only 4.2 light-years), it may be the best target for an unmanned spacecraft. The reason is its brightness.

Stardrive technology is still in its infancy; most of it is still on drawing boards. For now, the only “mature” technology we have is light pressure on solar sails. Within the next 50 years or so we will have the technical ability to launch a solar sail towards a nearby star and accelerate it to a good fraction of the speed of light. The problem is decelerating the spaceship when it arrives at its destination; otherwise it will go zipping by with only a few seconds to make measurements after its long trek.

A better idea is to let the starlight push against the solar sail to decelerate it to orbital speed by the time it arrives. That way, the spaceship can orbit the target star for years. This is a possibility with Sirius. Because it is so bright, its light can decelerate the spaceship even when it is initially moving at relativistic speeds. By one calculation, the trip to Sirius, including the deceleration and orbital insertion, would take only about 69 years [16]. That’s just one lifetime. Signals could be beaming back from Sirius as early as 2100, within the lifetimes of today’s children.
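The advantage of a bright target can be seen from a simple, non-relativistic energy balance. A perfectly reflecting sail facing a star of luminosity L feels an outward push of 2L/(4πr²cσ) per unit mass (σ is the sail’s mass per unit area) against a gravitational pull of GM/r². Since both scale as 1/r², the largest arrival speed the star can brake to rest by a closest approach r_min is v = √(2K/r_min), with K = 2L/(4πcσ) − GM. The Python sketch below assumes approximate values for Sirius A (25.4 solar luminosities, 2.06 solar masses, 1.71 solar radii), a hypothetical ultralight sail of 8.6 × 10⁻⁷ kg/m², and a closest approach of five stellar radii. It is not the calculation behind reference [16], and it ignores relativistic corrections, sail heating, and steering.

```python
import math

G = 6.674_30e-11    # gravitational constant, m^3 kg^-1 s^-2
C = 2.997_924_58e8  # speed of light, m/s
L_SUN = 3.828e26    # solar luminosity, W
M_SUN = 1.988_4e30  # solar mass, kg
R_SUN = 6.957e8     # solar radius, m


def max_brakeable_speed(lum_solar: float, mass_solar: float, radius_solar: float,
                        sigma_kg_m2: float, r_min_radii: float = 5.0) -> float:
    """Largest incoming speed (m/s) that starlight can reduce to zero by closest approach.

    Net outward acceleration on a face-on, perfectly reflecting sail:
        a(r) = [2 L / (4 pi c sigma) - G M] / r**2 = K / r**2
    Work per unit mass from infinity to r_min is K / r_min, so v = sqrt(2 K / r_min).
    Non-relativistic point-source model; ignores heating and sail steering."""
    k = 2 * lum_solar * L_SUN / (4 * math.pi * C * sigma_kg_m2) - G * mass_solar * M_SUN
    if k <= 0:
        return 0.0  # gravity beats radiation pressure: light cannot brake this sail
    r_min = r_min_radii * radius_solar * R_SUN
    return math.sqrt(2 * k / r_min)


SIGMA = 8.6e-7  # kg/m^2, HYPOTHETICAL ultralight sail (assumption, not from the text)

stars = {
    "Sirius A (assumed L=25.4, M=2.06, R=1.71)": (25.4, 2.06, 1.71),
    "Sun-like star (L=1, M=1, R=1)": (1.0, 1.0, 1.0),
}
for name, (lum, mass, radius) in stars.items():
    v = max_brakeable_speed(lum, mass, radius, SIGMA)
    print(f"{name}: up to {v / C:.3f} c")
```

With the same sail, the sketch lets Sirius stop a craft arriving roughly four times faster than a Sun-like star could, because the brakeable speed grows as the square root of the star’s luminosity.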

Footnotes

[1] This section is excerpted from D. D. Nolte, “The Fall and Rise of the Doppler Effect,” Physics Today (2020).

[2] W. Huggins, “Further observations on the spectra of some of the stars and nebulae, with an attempt to determine therefrom whether these bodies are moving towards or from the earth, also observations on the spectra of the sun and of comet II,” Philos. Trans. R. Soc. London, vol. 158, pp. 529-564, 1868. The correct value is −5.5 km/sec (approaching Earth); Huggins got the magnitude and even the sign wrong.

[3] J. B. Hearnshaw, The Analysis of Starlight (Cambridge University Press, 2014), p. 89.

[4] The Potsdam Observatory was where the American Albert Michelson built his first interferometer while studying with Helmholtz in Berlin.

[5] H. C. Vogel, Publik. der astrophysik. Observ. Potsdam 1: 1 (1892).

[6] A. Belopolsky, “On an apparatus for the laboratory demonstration of the Doppler-Fizeau principle,” Astrophysical Journal, vol. 13, pp. 15-24, Jan 1901.

[7] https://adsabs.harvard.edu/full/1980QJRAS..21..246H

[8] D. D. Nolte, Interference: The History of Optical Interferometry and the Scientists who Tamed Light (Oxford University Press, 2023)

[9] M. Ryle and D. D. Vonberg, “Solar Radiation on 175 Mc/sec,” Nature, vol. 158 (1946): pp. 339-340; K. I. Kellermann and J. M. Moran, “The development of high-resolution imaging in radio astronomy,” Annual Review of Astronomy and Astrophysics, vol. 39 (2001): pp. 457-509.

[10] M. Ryle, “Solar radio emissions and sunspots,” Nature, vol. 161, no. 4082 (1948): p. 136.

[11] R. H. Brown, The intensity interferometer; its application to astronomy (London, New York, Taylor & Francis; Halsted Press, 1974).

[12] R. H. Brown, Boffin: A personal story of the early days of radar and radio astronomy (Adam Hilger, 1991), p. 106.

[13] R. H. Brown and R. Q. Twiss, “Test of a new type of stellar interferometer on Sirius,” Nature, vol. 178, no. 4541 (1956): pp. 1046-1048.

[14] S. L. McCall, T. R. Brown, and A. Passner, “Improved optical stellar image using a real-time phase-correction system: initial results,” Astrophysical Journal, vol. 211, no. 2, pp. 463-468 (1977).

[15] A. Buffington, F. S. Crawford, R. A. Muller, and C. D. Orth, “First observatory results with an image-sharpening telescope,” Journal of the Optical Society of America, vol. 67, no. 3, pp. 304-305 (1977).

[16] https://www.newscientist.com/article/2128443-quickest-we-could-visit-another-star-is-69-years-heres-how/