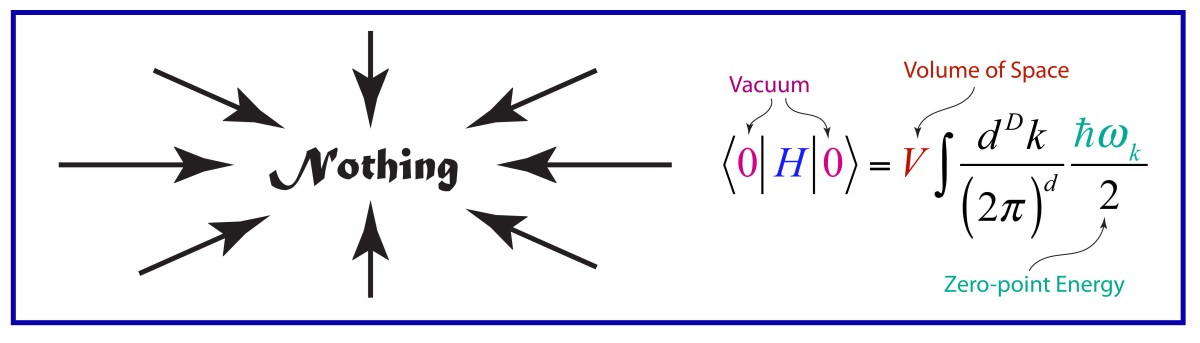

It may be hard to get excited about nothing … unless nothing is the whole ball game.

The only way we can really know what is, is by knowing what isn’t. Nothing is the backdrop against which we measure something. Experimentalists spend almost as much time doing control experiments, where nothing happens (or nothing is supposed to happen) as they spend measuring a phenomenon itself, the something.

Even the universe, full of so much something, came out of nothing during the Big Bang. And today the energy density of nothing, so-called Dark Energy, is blowing our universe apart, propelling it ever faster to a bitter cold end.

So here is a brief history of nothing, tracing how we have understood what it is, where it came from, and where it is today.

With sturdy shoulders, space stands opposing all its weight to nothingness. Where space is, there is being.

Friedrich Nietzsche

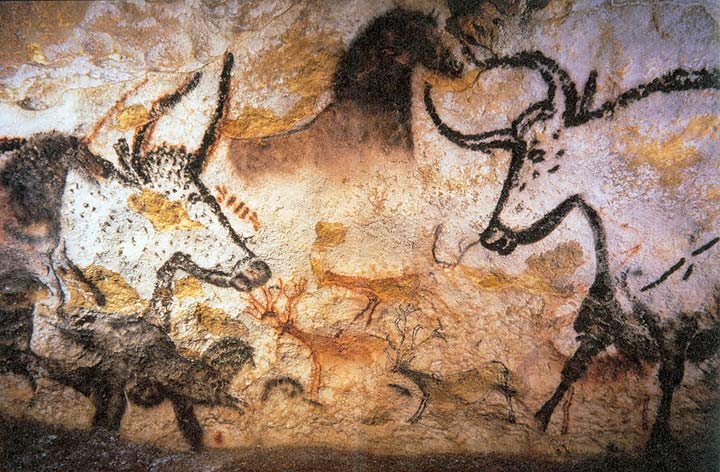

40,000 BCE – Cosmic Origins

This is a human history, about how we Homo sapiens try to understand the natural world around us, so the first step on a history of nothing is the Big Bang of human consciousness that occurred sometime between 100,000 and 40,000 years ago. Some sort of collective phase transition happened in our thought process when we seem to have become aware of our own existence within the natural world. This time frame coincides with the beginning of representational art and ritual burial. This is also likely the time when human language skills reached their modern form, and when logical arguments (stories) were first told to explain our existence and origins.

Roughly two origin stories emerged from this time. One of these assumes that what is has always been, either continuously or cyclically. Buddhism and Hinduism are part of this tradition as are many of the origin philosophies of Indigenous North Americans. Another assumes that there was a beginning when everything came out of nothing. Abrahamic faiths (Let there be light!) subscribe to this creatio ex nihilo. What came before creation? Nothing!

500 BCE – Leucippus and Democritus Atomism

The Greek philosopher Leucippus and his student Democritus, living around 500 BCE, were the first to lay out the atomic theory in which the elements of substance were indivisible atoms of matter, and between the atoms of matter was void. The different materials around us were created by the different ways that these atoms collide and cluster together. Plato later adhered to this theory, developing ideas along these lines in his Timaeus.

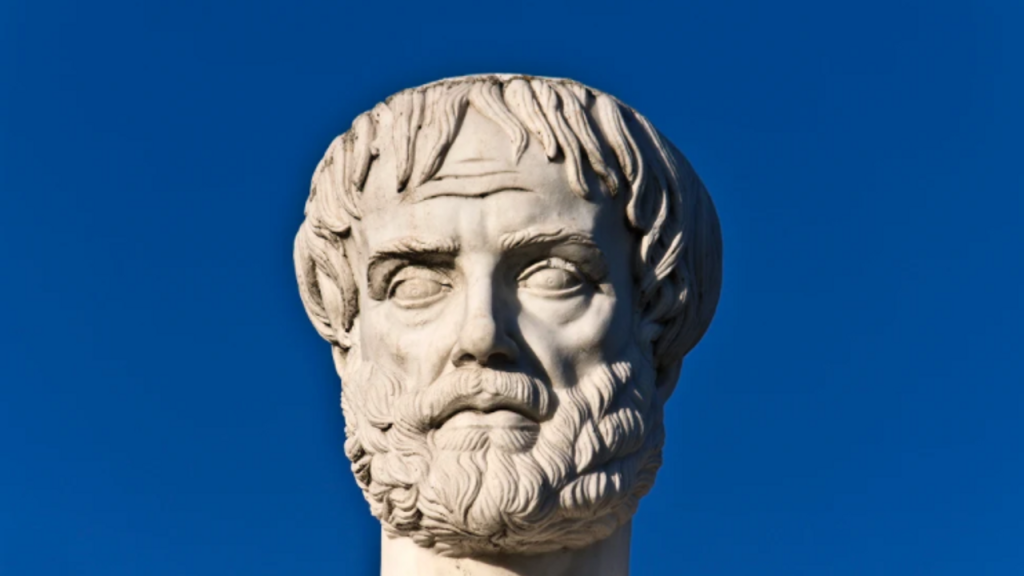

300 BCE – Aristotle Vacuum

Aristotle is famous for arguing, in his Physics Book IV, Section 8, that nature abhors a vacuum (horror vacui) because any void would be immediately filled by the imposing matter surrounding it. He also argued more philosophically that nothing, by definition, cannot exist.

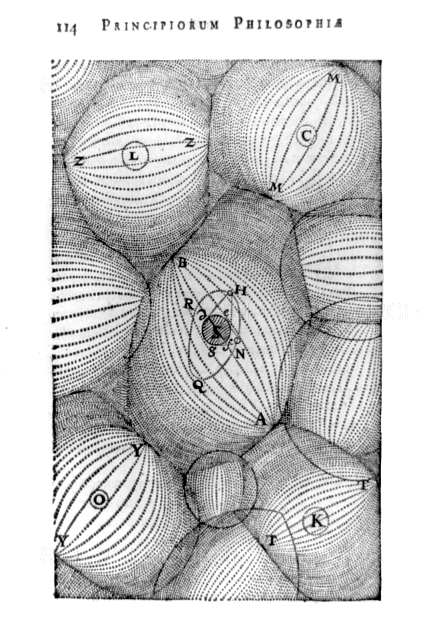

1644 – Rene Descartes Vortex Theory

Fast forward nearly two millennia, and theories of existence were finally achieving a level of sophistication that can be called “scientific”. Rene Descartes followed Aristotle’s views of the vacuum, but he extended them to the vacuum of space, filling it with an incompressible fluid in his Principles of Philosophy (1644). As in water, motion in such a fluid can only occur by shear, leading to vortices. Descartes was a better philosopher than mathematician, so it took Christiaan Huygens to apply mathematics to vortex motion to “explain” the gravitational effects of the solar system.

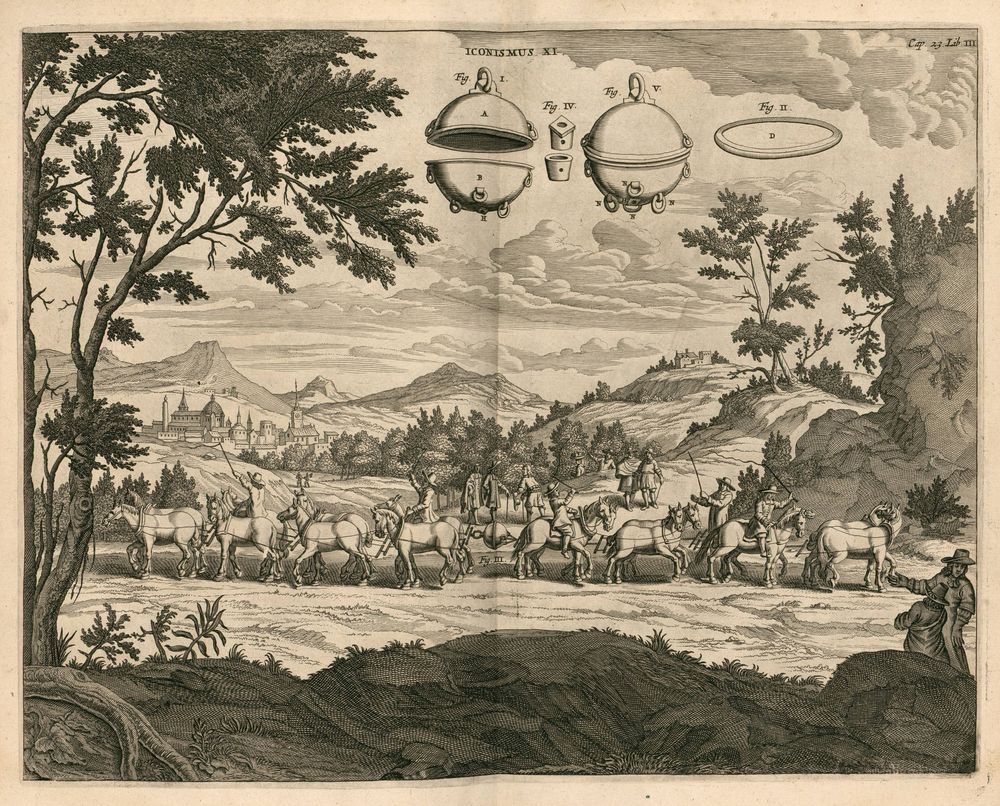

1654 – Otto von Guericke Vacuum Pump

Otto von Guericke is one of those hidden gems of the history of science, a person whom almost no one remembers today, but who was far in advance of his own day. He was a powerful politician, holding the position of Burgomaster of the city of Magdeburg for more than 30 years, helping to rebuild it after it was sacked during the Thirty Years War. He was also a diplomat, playing a key role in the reorientation of power within the Holy Roman Empire. How he had free time is anyone’s guess, but he used it to pursue scientific interests that spanned from electrostatics to his invention of the vacuum pump.

With a succession of vacuum pumps, each better than the last, von Guericke was like a kid in a toy factory, pumping the air out of anything he could find. In the process, he showed that a vacuum would extinguish a flame and could raise water in a tube.

His most famous demonstration was, of course, the Magdeburg hemispheres demonstration. In 1657 he fabricated two 20-inch hemispheres that he attached together with a vacuum seal and used his vacuum pump to evacuate the air from inside. He then attached chains from the hemispheres to a team of eight horses on each side, for a total of 16 horses, who were unable to separate the hemispheres. This dramatically demonstrated that air exerts a force on surfaces, and that Aristotle and Descartes were wrong: nature did allow a vacuum!
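The size of the force the horses were fighting can be estimated with a short sketch. It assumes a perfect vacuum inside, standard atmospheric pressure outside, and that "20-inch" refers to the diameter; none of these details are stated in the account above, and von Guericke's pump would not have reached a perfect vacuum, so the result is an upper bound. The function name is illustrative.

```python
import math

ATM_PRESSURE_PA = 101_325.0  # assumed outside pressure: one standard atmosphere
INCH_TO_M = 0.0254


def hemisphere_holding_force(diameter_m, outside_pa=ATM_PRESSURE_PA, inside_pa=0.0):
    """Force in newtons needed to pull two evacuated hemispheres apart.

    The net force equals the pressure difference times the circular
    cross-section of the sphere, not its curved surface area.
    """
    cross_section_m2 = math.pi * (diameter_m / 2.0) ** 2
    return (outside_pa - inside_pa) * cross_section_m2


force_n = hemisphere_holding_force(20 * INCH_TO_M)
print(f"Holding force: {force_n:.0f} N")
```

Under these assumptions the force comes out at roughly 20 kN, comparable to the weight of a two-tonne mass, which makes the failure of the horse teams less surprising.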

1667 – Isaac Newton Action at a Distance

When it came to the vacuum, Newton was agnostic. His universal theory of gravitation posited action at a distance, but the intervening medium played no direct role.

Nothing comes from nothing, Nothing ever could.

Rodgers and Hammerstein, The Sound of Music

This would seem to say that Newton had nothing to say about the vacuum, but his other major work, his Opticks, established particles as the elements of light rays. Such light particles travelled easily through vacuum, so the particle theory of light came down on the empty side of space.

1821 – Augustin Fresnel Luminiferous Aether

Today, we tend to think of Thomas Young as the chief proponent for the wave nature of light, going against the towering reputation of his own countryman Newton, and his courage and insights are admirable. But it was Augustin Fresnel who put mathematics to the theory. It was also Fresnel, working with his friend Francois Arago, who established that light waves are purely transverse.

For these contributions, Fresnel stands as one of the greatest physicists of the 1800’s. But his transverse light waves gave birth to one of the greatest red herrings of that century—the luminiferous aether. The argument went something like this, “if light is waves, then just as sound is oscillations of air, light must be oscillations of some medium that supports it – the luminiferous aether.” Arago searched for effects of this aether in his astronomical observations, but he didn’t see it, and Fresnel developed a theory of “partial aether drag” to account for Arago’s null measurement. Hippolyte Fizeau later confirmed the Fresnel “drag coefficient” in his famous measurement of the speed of light in moving water. (For the full story of Arago, Fresnel and Fizeau, see Chapter 2 of “Interference”. [1])

But the transverse character of light also required that this unknown medium must have some stiffness to it, like solids that support transverse elastic waves. This launched almost a century of alternative ideas of the aether that drew in such stellar actors as George Green, George Stokes and Augustin Cauchy with theories spanning from complete aether drag to zero aether drag with Fresnel’s partial aether drag somewhere in the middle.

1849 – Michael Faraday Field Theory

Michael Faraday was one of the most intuitive physicists of the 1800’s. He worked by feel and mental images rather than by equations and proofs. He took nothing for granted, able to see what his experiments were telling him instead of looking only for what he expected.

This talent allowed him to see lines of force when he mapped out the magnetic field around a current-carrying wire. Physicists before him, including Ampere who developed a mathematical theory for the magnetic effects of a wire, thought only in terms of Newton’s action at a distance. All forces were central forces that acted in straight lines. Faraday’s experiments told him something different. The magnetic lines of force were circular, not straight. And they filled space. This realization led him to formulate his theory for the magnetic field.

Others at the time rejected this view, until William Thomson (the future Lord Kelvin) wrote a letter to Faraday in 1845 telling him that he had developed a mathematical theory for the field. He suggested that Faraday look for effects of fields on light, which Faraday found just one month later when he observed the rotation of the polarization of light when it propagated in a high-index material subject to a high magnetic field. This effect is now called Faraday Rotation and was one of the first experimental verifications of the direct effects of fields.

Nothing is more real than nothing.

Samuel Beckett

In 1849, Faraday stated his theory of fields in their strongest form, suggesting that fields in empty space were the repository of magnetic phenomena rather than magnets themselves [2]. He also proposed a theory of light in which the electric and magnetic fields induced each other in repeated succession without the need for a luminiferous aether.

1861 – James Clerk Maxwell Equations of Electromagnetism

James Clerk Maxwell pulled the various electric and magnetic phenomena together into a single grand theory, although the four succinct vector “Maxwell Equations” that we know and love today were condensed by Oliver Heaviside from Maxwell’s original 15 equations (written using Hamilton’s awkward quaternions).

One of the most significant and most surprising things to come out of Maxwell’s equations was the speed of electromagnetic waves, which matched closely the known speed of light, providing near-certain proof that light was an electromagnetic wave.
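The match between the wave speed and the speed of light can be checked numerically. The sketch below uses the modern SI form of the result, a wave speed of 1/√(μ₀ε₀), with present-day values of the vacuum permeability and permittivity. The notation and constants are assumptions of this example rather than Maxwell's original formulation.

```python
import math

MU_0 = 4e-7 * math.pi          # vacuum permeability in H/m (classical defined value)
EPSILON_0 = 8.8541878128e-12   # vacuum permittivity in F/m (CODATA 2018)
SPEED_OF_LIGHT = 299_792_458.0  # m/s, for comparison


def em_wave_speed(mu, epsilon):
    """Speed of electromagnetic waves in a medium with the given mu and epsilon."""
    return 1.0 / math.sqrt(mu * epsilon)


wave_speed = em_wave_speed(MU_0, EPSILON_0)
print(f"Predicted wave speed: {wave_speed:.6e} m/s")
print(f"Relative difference from c: {abs(wave_speed - SPEED_OF_LIGHT) / SPEED_OF_LIGHT:.1e}")
```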

However, the propagation of electromagnetic waves in Maxwell’s theory did not rule out the existence of a supporting medium, the luminiferous aether. It was still not clear that fields could exist in a pure vacuum; they might instead be like the stress fields in solids.

Late in his life, just before he died, Maxwell pointed out that no measurement of relative speed through the aether performed on a moving Earth could see deviations that were linear in the speed of the Earth; any effect would instead be second order. He considered that such second-order effects would be far too small ever to detect, but Albert Michelson had different ideas.

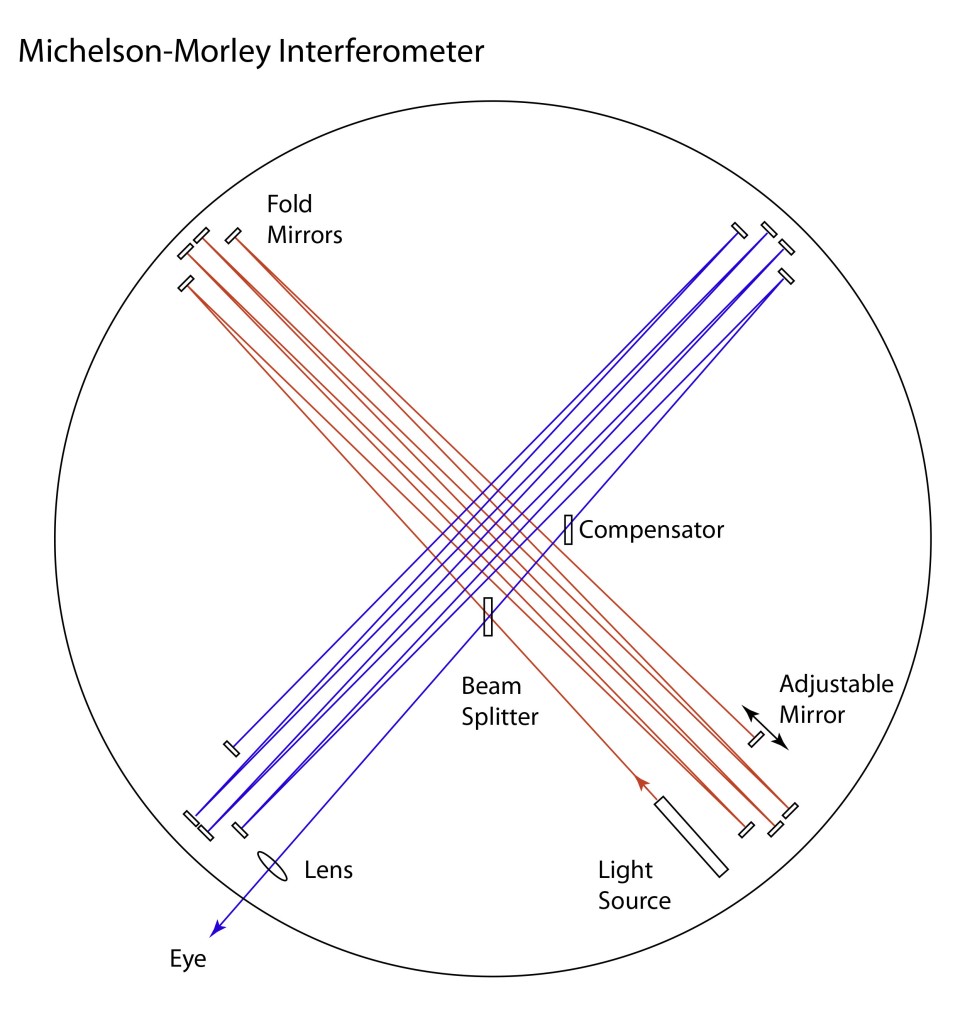

1887 – Albert Michelson Null Experiment

Albert Michelson was convinced of the existence of the luminiferous aether, and he was equally convinced that he could detect it. In 1880, working in the basement of the Potsdam Observatory outside Berlin, he operated his first interferometer in a search for evidence of the motion of the Earth through the aether. He had built the interferometer, what has come to be called a Michelson Interferometer, months earlier in the laboratory of Hermann von Helmholtz in the center of Berlin, but the footfalls of the horse carriages outside the building disturbed the measurements too much. Potsdam was quieter.

But he could find no difference in his interference fringes as he oriented the arms of his interferometer parallel and orthogonal to the Earth’s motion. A simple calculation told him that his interferometer design should have been able to detect it—just barely—so the null experiment was a puzzle.

Seven years later, again in a basement (this time in a student dormitory at Western Reserve College in Cleveland, Ohio), Michelson repeated the experiment with an interferometer that was ten times more sensitive. He did this in collaboration with Edward Morley. But again, the results were null. There was no difference in the interference fringes regardless of which way he oriented his interferometer. Motion through the aether was undetectable.
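The "simple calculation" behind these expectations can be sketched with the standard textbook estimate of the fringe shift on rotating the interferometer by 90 degrees, ΔN ≈ 2L v² / (λ c²). The path lengths (about 1.2 m for the Potsdam instrument and about 11 m for the Cleveland one), the 550 nm wavelength, and the 30 km/s orbital speed are commonly quoted approximate values assumed here for illustration; they are not taken from the text above.

```python
EARTH_ORBITAL_SPEED = 3.0e4   # m/s, assumed speed of the Earth through the aether
SPEED_OF_LIGHT = 2.998e8      # m/s


def expected_fringe_shift(path_length_m, wavelength_m,
                          speed=EARTH_ORBITAL_SPEED, c=SPEED_OF_LIGHT):
    """Second-order fringe shift expected when the arms are rotated by 90 degrees."""
    return 2.0 * path_length_m * (speed / c) ** 2 / wavelength_m


WAVELENGTH_M = 550e-9  # assumed visible wavelength

shift_potsdam = expected_fringe_shift(1.2, WAVELENGTH_M)     # assumed 1.2 m path
shift_cleveland = expected_fringe_shift(11.0, WAVELENGTH_M)  # assumed 11 m path

print(f"Potsdam:   {shift_potsdam:.3f} of a fringe")
print(f"Cleveland: {shift_cleveland:.3f} of a fringe")
print(f"Sensitivity gain: {shift_cleveland / shift_potsdam:.1f}x")
```

With these inputs the first instrument expects only a few hundredths of a fringe, which is why detection was "just barely" possible, while the second expects a few tenths, roughly the tenfold gain described above.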

(Michelson has a fascinating backstory, complete with firestorms (literally) and the Wild West and a moment when he was almost committed to an insane asylum against his will by a vengeful wife. To read all about this, see Chapter 4: After the Gold Rush in my recent book Interference (Oxford, 2023)).

The Michelson-Morley experiment did not create the crisis in physics that it is sometimes credited with. They published their results, and the physics world took it in stride. Voigt and FitzGerald and Lorentz and Poincaré toyed with various ideas to explain it away, but there had already been so many different models, from complete drag to no drag, that a few more theories just added to the bunch.

But they all had their heads in a haze. It took an unknown patent clerk in Switzerland to blow away the wisps and bring the problem into the crystal clear.

1905 – Albert Einstein Relativity

So much has been written about Albert Einstein’s “miracle year” of 1905 that it has lapsed into a form of physics mythology. Looking back, it seems like his own personal Big Bang, springing forth out of the vacuum. He published 5 papers that year, each one launching a new approach to physics on a bewildering breadth of problems from statistical mechanics to quantum physics, from electromagnetism to light … and of course, Special Relativity [3].

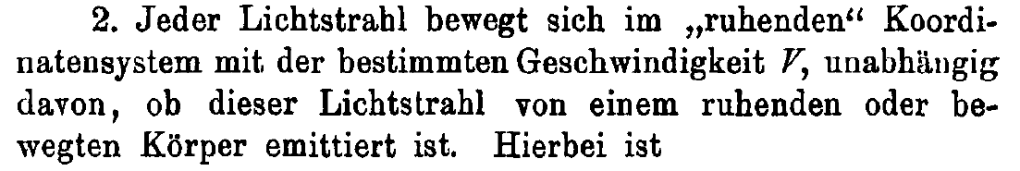

Whereas the others, Voigt and FitzGerald and Lorentz and Poincaré, were trying to reconcile measurements of the speed of light in relative motion, Einstein just replaced all that musing with a simple postulate, his second postulate of relativity theory:

2. Any ray of light moves in the “stationary” system of co-ordinates with the determined velocity c, whether the ray be emitted by a stationary or by a moving body. Hence …

Albert Einstein, Annalen der Physik, 1905

And the rest was just simple algebra, in complete agreement with Michelson’s null experiment and with Fizeau’s measurement of the so-called Fresnel drag coefficient, while also leading to the famous E = mc² and beyond.
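As one illustration of that algebra, the Fresnel drag coefficient follows from the relativistic velocity-addition formula. This is a sketch under stated assumptions: light travels at speed c/n in water of refractive index n, and the water flows at speed v along the light path (the symbols n and v are introduced here and do not appear in the original text). The speed seen in the laboratory is then

$$u = \frac{c/n + v}{1 + \dfrac{v}{nc}} \approx \frac{c}{n} + v\left(1 - \frac{1}{n^{2}}\right)$$

to first order in v/c. The factor (1 − 1/n²) is the partial drag coefficient that Fresnel proposed and Fizeau measured, obtained here without any aether.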

There is no aether. Electromagnetic waves are self-supporting in vacuum—changing electric fields induce changing magnetic fields that induce, in turn, changing electric fields—and so it goes.

The vacuum is vacuum—nothing! Except that it isn’t. It is still full of things.

1931 – P. A. M. Dirac Antimatter

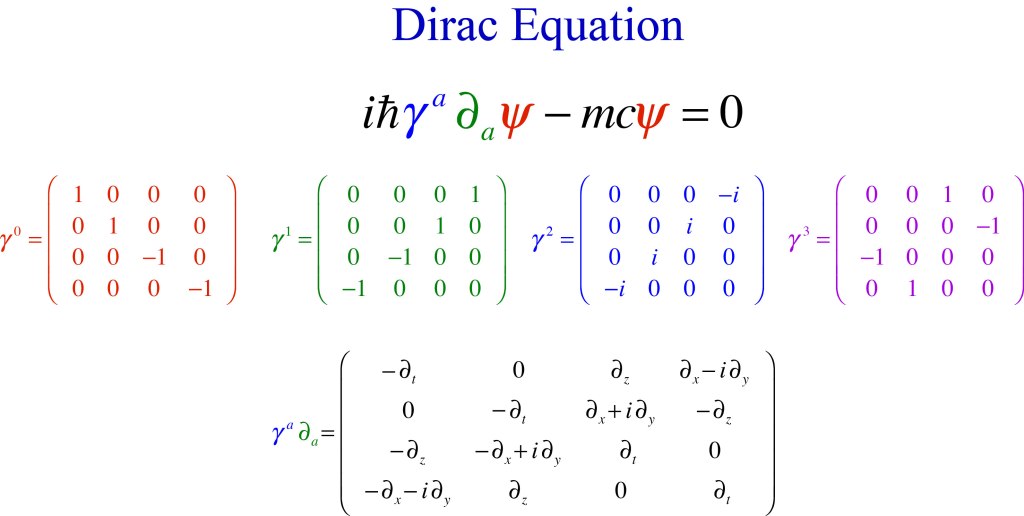

The Dirac equation is the famous end-product of P. A. M. Dirac’s search for a relativistic form of the Schrödinger equation. It replaces the asymmetric use in Schrödinger’s form of a second spatial derivative and a first time derivative with Dirac’s form using only first derivatives that are compatible with relativistic transformations [4].

One of the immediate consequences of this equation is a solution that has negative energy. This solution was at first puzzling and hard to interpret [5], but Dirac eventually hit on the amazing proposal that these negative energy states are real particles paired with ordinary particles. For instance, the negative energy state associated with the electron was an anti-electron, a particle with the same mass as the electron, but with positive charge. Furthermore, because the anti-electron has negative energy and the electron has positive energy, these two particles can annihilate and convert their mass energy into the energy of gamma rays. This audacious proposal was confirmed by the American physicist Carl Anderson, who discovered the positron in 1932.

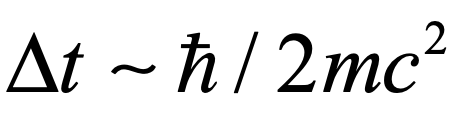

The existence of particles and anti-particles, combined with Heisenberg’s uncertainty principle, suggests that vacuum fluctuations can spontaneously produce electron-positron pairs that would then annihilate within a time related to the mass energy

$$\Delta t \approx \frac{\hbar}{2 m_e c^{2}}$$

where $m_e$ is the electron mass, so that $2 m_e c^{2}$ is the rest energy of the pair. Although this is an exceedingly short time (about $10^{-21}$ seconds), it means that the vacuum is not empty, but contains a frothing sea of particle-antiparticle pairs popping into and out of existence.
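The time scale quoted here can be checked with a rough estimate from the energy-time uncertainty relation. The sketch assumes the fluctuation must borrow the rest energy of one electron-positron pair, 2mₑc², and takes Δt ≈ ħ / (2mₑc²); the exact prefactor is a matter of convention, so only the order of magnitude is meaningful.

```python
HBAR = 1.054571817e-34          # reduced Planck constant, J s
ELECTRON_MASS = 9.1093837015e-31  # kg
SPEED_OF_LIGHT = 299_792_458.0    # m/s


def virtual_pair_lifetime(particle_mass_kg):
    """Order-of-magnitude lifetime of a virtual particle-antiparticle pair.

    Assumes delta_t ~ hbar / delta_E with delta_E equal to the rest
    energy of the pair, 2 * m * c**2.
    """
    pair_rest_energy_j = 2.0 * particle_mass_kg * SPEED_OF_LIGHT ** 2
    return HBAR / pair_rest_energy_j


lifetime_s = virtual_pair_lifetime(ELECTRON_MASS)
print(f"Electron-positron pair lifetime: {lifetime_s:.1e} s")
```

Heavier particles give proportionally shorter lifetimes, since the borrowed energy scales with the mass.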

1938 – M. C. Escher Negative Space

Scientists are not the only ones who think about empty space. Artists, too, are deeply committed to a visual understanding of the world around us, and the use of negative space in art dates back virtually to the first cave paintings. However, artists and art historians have only talked explicitly in such terms since the 1930’s and 1940’s [6]. One of the best early examples of the interplay between positive and negative space was a print made by M. C. Escher in 1938 titled “Day and Night”.

1946 – Edward Purcell Modified Spontaneous Emission

In 1916 Einstein laid out the laws of photon emission and absorption using very simple arguments (his modus operandi) based on the principles of detailed balance. He discovered that light can be emitted either spontaneously or through stimulated emission (the basis of the laser) [7]. Once the nature of vacuum fluctuations was realized through the work of Dirac, spontaneous emission was understood more deeply as a form of stimulated emission caused by vacuum fluctuations. In the absence of vacuum fluctuations, spontaneous emission would be inhibited. Conversely, if vacuum fluctuations are enhanced, then spontaneous emission would be enhanced.

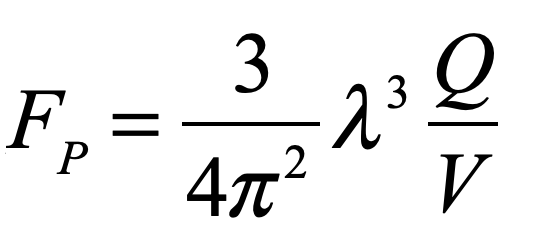

This effect was observed by Edward Purcell in 1946 through the observation of emission times of an atom in an RF cavity [8]. When the atomic transition was resonant with the cavity, spontaneous emission times were much shorter. The Purcell enhancement factor is

$$F_P = \frac{3}{4\pi^{2}}\left(\frac{\lambda}{n}\right)^{3}\frac{Q}{V}$$

where Q is the “Q” of the cavity, V is the cavity volume, and λ/n is the wavelength inside the cavity medium of refractive index n. The physical basis of this effect is the modification of vacuum fluctuations by the cavity modes caused by interference effects. When cavity modes have constructive interference, then vacuum fluctuations are larger, and spontaneous emission is stimulated more quickly.
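To get a feel for the numbers, the enhancement can be evaluated with the standard textbook form of the Purcell factor, F = (3 / 4π²) (λ/n)³ (Q / V). In this sketch the function name, the example Q of 1000, the 1 cm wavelength, and a mode volume of one cubic wavelength are illustrative assumptions, not values from Purcell's experiment.

```python
import math


def purcell_factor(quality_factor, mode_volume_m3, wavelength_m, refractive_index=1.0):
    """Spontaneous-emission enhancement for an emitter resonant with a cavity mode."""
    wavelength_in_medium = wavelength_m / refractive_index
    return (3.0 / (4.0 * math.pi ** 2)
            * wavelength_in_medium ** 3
            * quality_factor / mode_volume_m3)


# Hypothetical cavity: Q = 1000 and a mode volume of one cubic wavelength.
wavelength = 1.0e-2  # assumed 1 cm (radio-frequency) wavelength
enhancement = purcell_factor(1000.0, wavelength ** 3, wavelength)
print(f"Purcell enhancement: {enhancement:.1f}")
```

The formula makes the design trade-off explicit: the enhancement grows with a sharper resonance (larger Q) and with tighter confinement (smaller V).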

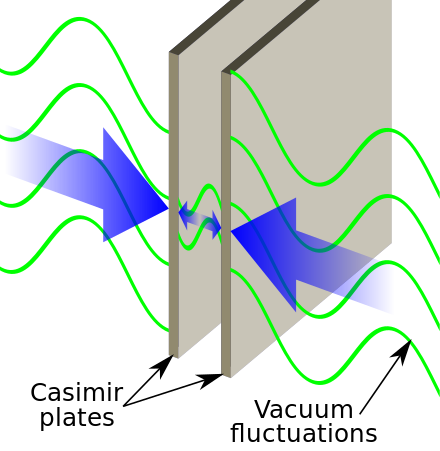

1948 – Hendrik Casimir Vacuum Force

Interference effects in a cavity affect the total energy of the system by excluding some modes, which become inaccessible to vacuum fluctuations. This lowers the energy inside the cavity relative to free space outside it, resulting in a net “pressure” acting on the cavity. If two parallel plates are placed in close proximity, this causes a force of attraction between them. The effect was predicted in 1948 by Hendrik Casimir [9], but it was not verified experimentally until 1997 by S. Lamoreaux, then at the University of Washington [10].
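The strength of this attraction can be estimated from the standard textbook result for ideal, perfectly conducting parallel plates at zero temperature, P = π²ħc / (240 d⁴), where d is the plate separation. The formula and the 1 μm example separation are assumptions of this sketch and are not quoted in the text above; real plates show corrections for finite conductivity, temperature and geometry.

```python
import math

HBAR = 1.054571817e-34          # reduced Planck constant, J s
SPEED_OF_LIGHT = 299_792_458.0  # m/s


def casimir_pressure(separation_m):
    """Attractive pressure in pascals between ideal parallel plates."""
    return math.pi ** 2 * HBAR * SPEED_OF_LIGHT / (240.0 * separation_m ** 4)


pressure_1um = casimir_pressure(1.0e-6)
pressure_half_um = casimir_pressure(0.5e-6)
print(f"Pressure at 1.0 um: {pressure_1um:.2e} Pa")
print(f"Pressure at 0.5 um: {pressure_half_um:.2e} Pa")
```

At a 1 μm gap the pressure is only about a millipascal, but halving the gap raises it sixteenfold because of the steep 1/d⁴ dependence, which helps explain why the measurement had to wait for precise control of sub-micron separations.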

1949 – Shinichiro Tomonaga, Richard Feynman and Julian Schwinger QED

The physics of the vacuum in the years up to 1948 had been a hodge-podge of ad hoc theories that captured the qualitative aspects, and even some of the quantitative aspects of vacuum fluctuations, but a consistent theory was lacking until the work of Tomonaga in Japan, Feynman at Cornell and Schwinger at Harvard. Feynman and Schwinger both published their theory of quantum electrodynamics (QED) in 1949. They were actually scooped by Tomonaga, who had developed his theory earlier during WWII, but physics research in Japan had been cut off from the outside world. It was when Oppenheimer received a letter from Tomonaga in 1949 that the West became aware of his work. All three received the Nobel Prize for their work on QED in 1965. Precision tests of QED now make it one of the most accurately confirmed theories in physics.

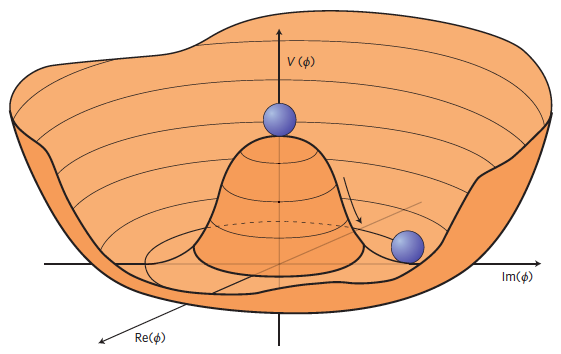

1964 – Peter Higgs and The Higgs

The Higgs particle, known as “The Higgs”, was the brain-child of Peter Higgs, Francois Englert and Gerald Guralnik in 1964. Higgs’ name became associated with the theory because of a response letter he wrote to an objection made about the theory. The Higgs mechanism is spontaneous symmetry breaking in which a high-symmetry potential can lower its energy by distorting the field, arriving at a new minimum in the potential. This mechanism can allow the bosons that carry force to acquire mass (something the earlier Yang-Mills theory could not do).

Spontaneous symmetry breaking is a ubiquitous phenomenon in physics. It occurs in the solid state when crystals can lower their total energy by slightly distorting from a high symmetry to a low symmetry. It occurs in superconductors in the formation of Cooper pairs that carry supercurrents. And here it occurs in the Higgs field as the mechanism that imbues particles with mass.

The theory was mostly ignored for its first decade, but later became the core of theories of electroweak unification. The Large Hadron Collider (LHC) at Geneva was built to detect the boson, and its discovery was announced in 2012. Peter Higgs and Francois Englert were awarded the Nobel Prize in Physics in 2013, just one year after the discovery.

The Higgs field permeates all space, and distortions in this field around idealized massless point particles are observed as mass. In this way empty space becomes anything but.

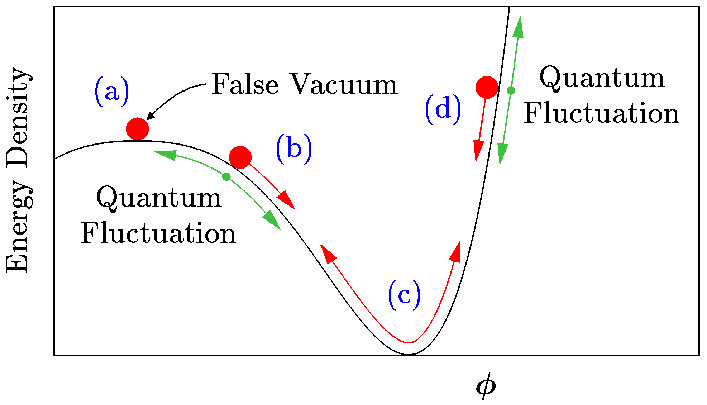

1981 – Alan Guth Inflationary Big Bang

Problems arose in observational cosmology in the 1970’s when it was understood that parts of the observable universe that should have been causally disconnected were in thermal equilibrium. This could only be possible if the universe were much smaller near the very beginning. In January of 1981, Alan Guth, then at Cornell University, realized that a rapid expansion from an initial quantum fluctuation could be achieved if an initial “false vacuum” existed in a positive energy density state (negative vacuum pressure). Such a false vacuum could relax to the ordinary vacuum, causing a period of very rapid growth that Guth called “inflation”. Equilibrium would have been achieved prior to inflation, solving the observational problem. Therefore, the inflationary model posits a multiplicity of different types of “vacuum”, and once again, simple vacuum is not so simple.

1998 – Saul Perlmutter Dark Energy

Einstein didn’t make many mistakes, but in the early days of General Relativity he constructed a theoretical model of a “static” universe. A central parameter in Einstein’s model was something called the Cosmological Constant. By tuning it to balance gravitational collapse, he tuned the universe into a static (though unstable) state. But when Edwin Hubble showed that the universe was expanding, Einstein was proven incorrect. His Cosmological Constant was set to zero and was considered to be a rare blunder.

Fast forward to 1998, and the Supernova Cosmology Project, directed by Saul Perlmutter, discovered that the expansion of the universe was accelerating. The simplest explanation was that Einstein had been right all along, or at least partially right, in that there was a non-zero Cosmological Constant. Not only is the universe not static, but it is literally blowing up. The physical origin of the Cosmological Constant is believed to be a form of energy density associated with the space of the universe. This “extra” energy density has been called “Dark Energy”, filling empty space.

Bottom Line

The bottom line is that nothing, i.e., the vacuum, is far from nothing. It is filled with a froth of particles, and energy, and fields, and potentials, and broken symmetries, and negative pressures, and who knows what else. Modern physics has been much ado about this so-called nothing, almost more than it has been about everything else.

References:

[1] David D. Nolte, Interference: The History of Optical Interferometry and the Scientists Who Tamed Light (Oxford University Press, 2023)

[2] L. Pearce Williams in “Faraday, Michael.” Complete Dictionary of Scientific Biography, vol. 4, Charles Scribner’s Sons, 2008, pp. 527-540.

[3] A. Einstein, “On the electrodynamics of moving bodies,” Annalen Der Physik 17, 891-921 (1905).

[4] Dirac, P. A. M. (1928). “The Quantum Theory of the Electron”. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences. 117 (778): 610–624.

[5] Dirac, P. A. M. (1930). “A Theory of Electrons and Protons”. Proceedings of the Royal Society A: Mathematical, Physical and Engineering Sciences. 126 (801): 360–365.

[6] Nikolai M Kasak, Physical Art: Action of positive and negative space, (Rome, 1947/48) [2d part rev. in 1955 and 1956].

[7] A. Einstein, “Strahlungs-Emission und -Absorption nach der Quantentheorie,” Verh. Deutsch. Phys. Ges. 18, 318 (1916).

[8] Purcell, E. M. (1946-06-01). “Proceedings of the American Physical Society: Spontaneous Emission Probabilities at Radio Frequencies”. Physical Review. American Physical Society (APS). 69 (11–12): 681.

[9] Casimir, H. B. G. (1948). “On the attraction between two perfectly conducting plates”. Proc. Kon. Ned. Akad. Wet. 51: 793.

[10] Lamoreaux, S. K. (1997). “Demonstration of the Casimir Force in the 0.6 to 6 μm Range”. Physical Review Letters. 78 (1): 5–8.